(sorry if you got this twice in your RSS, I hit the “publish” button too early…)

A while ago I decided to make an automatic serializer that works just like the XmlSerializer but for the SDL file format, since I like the simplicity and elegance of this data language. The XmlSerializer also doesn’t work natively with Dictionary objects, and crashes when used with certain visibility combinations and C# 3.0 auto-implemented properties.

Making a serializer for any language implies heavy use of reflection, both to determine the structure of what you’re reading from or writing to a data file and to invoke the getters/setters of the members you’re serializing.

Performance considerations

Some reflection operations come at a heavy performance cost. Not all of them though! This 2005 article in MSDN Magazine explains that fetching custom attributes, FieldInfo/PropertyInfo objects, invoking functions/properties and members and creating new instances are the costliest operations. Well that’s a problem, because all of those will be handy when writing our serializer.

The same article continues by showing which are the slowest method invocation techniques. The fastest techniques are direct calls, virtual method calls and direct delegate use, but those are impossible to use if all you’ve got is a Type and an Object. The next best thing is using a DynamicMethod object, IL emission and a delegate. Having never used IL before, I didn’t grasp all of that, but thankfully there are many other resources concerning the use of DynamicMethod out there.

A post on Haibo Luo’s blog from 2005 makes a performance comparison between Activator.CreateInstance() (by the way, doing “new T()” with a generic type parameter that’s constrained as “new()” is the exact same as calling this method) and various other techniques including DynamicMethod and using it as a delegate. This last technique blows the rest out of the water in terms of speed.
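To make the DynamicMethod-plus-delegate idea concrete, here is a minimal sketch of a constructor delegate. The `InstanceFactory` class and its `CreateConstructor` method are hypothetical names used for illustration; this is not the code from the post linked above, and it assumes the target type has a public parameterless constructor.

```csharp
using System;
using System.Reflection;
using System.Reflection.Emit;

// Hypothetical helper, for illustration only.
public static class InstanceFactory
{
    // Builds a delegate that invokes the public parameterless constructor of 'type'.
    public static Func<object> CreateConstructor(Type type)
    {
        ConstructorInfo ctor = type.GetConstructor(Type.EmptyTypes);
        if (ctor == null)
            throw new ArgumentException("No public parameterless constructor on " + type);

        // The dynamic method is hosted in the type's module; 'true' skips visibility checks.
        DynamicMethod method = new DynamicMethod(
            "Create_" + type.Name, typeof(object), Type.EmptyTypes, type.Module, true);

        ILGenerator il = method.GetILGenerator();
        il.Emit(OpCodes.Newobj, ctor); // push a new instance on the stack
        il.Emit(OpCodes.Ret);          // return it (a reference type needs no boxing)

        return (Func<object>)method.CreateDelegate(typeof(Func<object>));
    }
}
```

Once built, the delegate is called like any other: `Func<object> make = InstanceFactory.CreateConstructor(typeof(System.Text.StringBuilder)); object sb = make();`. The expensive part is building it, which is why it should be kept around and reused.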

This GPL library on CodeProject written by Alessandro Febretti provides an excellent dynamic method factory. And this other article on CodeProject goes a bit further and shows how to set/get values on fields, and isolates the boxing in helper functions.
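As a rough idea of what getting and setting a field through emitted IL looks like, here is a minimal sketch. `AccessorFactory` and `AccessorSample` are hypothetical names, not the classes from either CodeProject article, and the sketch assumes instance fields declared on a class (struct owners would need extra unboxing of the target).

```csharp
using System;
using System.Reflection;
using System.Reflection.Emit;

// Hypothetical sample type used to try the accessors.
public class AccessorSample
{
    public int Count;
    public string Name;
}

// Hypothetical helper; assumes instance fields whose declaring type is a class.
public static class AccessorFactory
{
    public static Func<object, object> CreateGetter(FieldInfo field)
    {
        DynamicMethod method = new DynamicMethod("Get_" + field.Name,
            typeof(object), new[] { typeof(object) }, field.DeclaringType.Module, true);
        ILGenerator il = method.GetILGenerator();
        il.Emit(OpCodes.Ldarg_0);                           // the target instance, typed as object
        il.Emit(OpCodes.Castclass, field.DeclaringType);    // cast it to the declaring class
        il.Emit(OpCodes.Ldfld, field);                      // read the field
        if (field.FieldType.IsValueType)
            il.Emit(OpCodes.Box, field.FieldType);          // box value types so they fit in object
        il.Emit(OpCodes.Ret);
        return (Func<object, object>)method.CreateDelegate(typeof(Func<object, object>));
    }

    public static Action<object, object> CreateSetter(FieldInfo field)
    {
        DynamicMethod method = new DynamicMethod("Set_" + field.Name,
            typeof(void), new[] { typeof(object), typeof(object) }, field.DeclaringType.Module, true);
        ILGenerator il = method.GetILGenerator();
        il.Emit(OpCodes.Ldarg_0);                           // the target instance
        il.Emit(OpCodes.Castclass, field.DeclaringType);
        il.Emit(OpCodes.Ldarg_1);                           // the new value, typed as object
        il.Emit(OpCodes.Unbox_Any, field.FieldType);        // unbox value types, cast reference types
        il.Emit(OpCodes.Stfld, field);                      // write the field
        il.Emit(OpCodes.Ret);
        return (Action<object, object>)method.CreateDelegate(typeof(Action<object, object>));
    }
}
```

The only places where boxing happens are the `Box` and `Unbox_Any` instructions, so the caller can keep working with plain `object` values.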

What I ended up doing is taking from all of these examples and correcting the problems outlined in the comments of both CodeProject samples. I then built an IReflectionProvider interface that exposes all these costly operations and can be implemented three different ways :

- DirectReflector : Simply via reflection

- EmitReflector : With IL emission but no caching performed (the DynamicMethods and delegates are rebuilt on each call)

- CachedReflector : With IL emission and caching (the resulting delegates are created only once, then accessed with a dictionary lookup)

I’m aware that the 2nd test case is ridiculous: you should never emit IL and regenerate methods repeatedly at runtime. But I wanted to outline the importance of caching.
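The difference between the last two variants comes down to a dictionary lookup. Here is a minimal sketch of the caching idea, limited to instance creation; `CachedFactory` and its `CompileCount` counter are hypothetical and are not the members of the actual CachedReflector.

```csharp
using System;
using System.Collections.Generic;
using System.Reflection;
using System.Reflection.Emit;

// Hypothetical sketch: emit the delegate once per type, then reuse it.
public static class CachedFactory
{
    static readonly Dictionary<Type, Func<object>> cache = new Dictionary<Type, Func<object>>();
    static readonly object gate = new object();

    // Only here to make the effect of the cache visible.
    public static int CompileCount;

    public static object Create(Type type)
    {
        Func<object> factory;
        lock (gate)
        {
            // The expensive IL emission only happens on a cache miss.
            if (!cache.TryGetValue(type, out factory))
            {
                factory = Compile(type);
                cache[type] = factory;
            }
        }
        return factory();
    }

    static Func<object> Compile(Type type)
    {
        CompileCount++;
        ConstructorInfo ctor = type.GetConstructor(Type.EmptyTypes);
        if (ctor == null)
            throw new ArgumentException("No public parameterless constructor on " + type);

        DynamicMethod method = new DynamicMethod(
            "Create_" + type.Name, typeof(object), Type.EmptyTypes, type.Module, true);
        ILGenerator il = method.GetILGenerator();
        il.Emit(OpCodes.Newobj, ctor);
        il.Emit(OpCodes.Ret);
        return (Func<object>)method.CreateDelegate(typeof(Func<object>));
    }
}
```

Removing the dictionary and calling `Compile` on every `Create` would give the behaviour of the uncached variant: same IL, but rebuilt each time.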

The serializer

When making this sample, I wanted to both provide a fast .NET reflection library as well as a proper generic implementation of a reflective serializer. But I didn’t want to spend time on string parsing/formatting, since serializers usually output a text file or a certain data format. So the tradeoff I chose is somewhat unusable in the real world…

It outputs objects which are an attempt at generalizing all .NET objects. There are three main categories :

- SerializedAtoms are indivisible, single-valued and immutable. All primitive types will serialize to atoms, in addition to strings, enums and nullable types.

- SerializedCollections are multi-valued object bags that don’t give a specific meaning to keys or indices other than natural ordering. All classes that implement ICollection<T> will serialize into this.

- SerializedAggregates are multi-valued object maps that use the key or index for identification. Everything that doesn’t fall in the two other categories will serialize to aggregates, so Dictionaries and just about any other class.

Only atoms contain actual values, and they hold them as a plain object. No string conversion is done in the end; it all remains in memory. Serialized objects also retain the name of their host field or dictionary entry if any, and the runtime type if different from the declared one.
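One way to picture the three categories is as a small class hierarchy plus a function that sorts a Type into one of them. This is only a sketch: the member names, the `Category` enum and the `Classifier` class are assumptions made for illustration, not the shapes used in the sample. Note that dictionaries also implement ICollection<T>, so the sketch tests for them first.

```csharp
using System;
using System.Collections;
using System.Collections.Generic;

// Hypothetical shapes for the three serialized categories.
public abstract class SerializedObject
{
    public string Name;       // host field or dictionary entry name, if any
    public Type RuntimeType;  // only set when it differs from the declared type
}

public sealed class SerializedAtom : SerializedObject
{
    public object Value;      // the actual value, kept as an object
}

public sealed class SerializedCollection : SerializedObject
{
    public readonly List<SerializedObject> Items = new List<SerializedObject>();
}

public sealed class SerializedAggregate : SerializedObject
{
    public readonly Dictionary<object, SerializedObject> Children =
        new Dictionary<object, SerializedObject>();
}

public enum Category { Atom, Collection, Aggregate }

public static class Classifier
{
    public static Category Classify(Type type)
    {
        // Primitives, strings, enums and nullable types are atoms.
        if (type.IsPrimitive || type.IsEnum || type == typeof(string)
            || Nullable.GetUnderlyingType(type) != null)
            return Category.Atom;

        // Assumption: dictionaries are checked first because they implement
        // ICollection<KeyValuePair<K, V>> and would otherwise look like collections.
        if (typeof(IDictionary).IsAssignableFrom(type))
            return Category.Aggregate;

        foreach (Type candidate in type.GetInterfaces())
            if (candidate.IsGenericType
                && candidate.GetGenericTypeDefinition() == typeof(ICollection<>))
                return Category.Collection;

        // Everything else is an aggregate.
        return Category.Aggregate;
    }
}
```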

To customize the serialization output to an extent, I made a custom attribute called [Serialization] which lets you force an alternate name on a serialized member, mark a member as ignored by the serializer, or mark it as required. I could’ve used “optional” instead, but I find it more logical to skip serialization of all null or default-valued fields.
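An attribute with those three options could look roughly like this. Only the [Serialization] name comes from the sample; the `Name`, `Ignore` and `Required` property names, the `Player` class and the `AttributeReader` helper are assumptions made for illustration.

```csharp
using System;
using System.Reflection;

// Hypothetical sketch of the attribute; the property names are assumptions.
[AttributeUsage(AttributeTargets.Field | AttributeTargets.Property)]
public sealed class SerializationAttribute : Attribute
{
    public string Name { get; set; }    // alternate name for the serialized member
    public bool Ignore { get; set; }    // skip this member entirely
    public bool Required { get; set; }  // serialize even when null or default-valued
}

// Hypothetical class showing the three options in use.
public class Player
{
    [Serialization(Name = "hp")]
    public int HitPoints;

    [Serialization(Ignore = true)]
    public string Password;

    [Serialization(Required = true)]
    public string Nickname;
}

public static class AttributeReader
{
    // Returns the attribute on a member, or null when the member has none.
    public static SerializationAttribute Get(MemberInfo member)
    {
        object[] found = member.GetCustomAttributes(typeof(SerializationAttribute), false);
        return found.Length > 0 ? (SerializationAttribute)found[0] : null;
    }
}
```

Fetching custom attributes is one of the costly operations listed earlier, so the result of a lookup like `AttributeReader.Get` is a good candidate for caching per member.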

Just like the XmlSerializer, it only serializes the public instance fields or properties. So unlike the BinaryFormatter (which is deep serialization), my serializer does shallow serialization.
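Selecting the public instance members is a matter of picking the right BindingFlags. A minimal sketch follows; `MemberScanner` and `ScanSample` are hypothetical names, and skipping read-only properties and indexers is an assumption about what a serializer would want, not a statement about the sample's exact rules.

```csharp
using System;
using System.Collections.Generic;
using System.Reflection;

// Hypothetical sample type with a mix of member kinds.
public class ScanSample
{
    public int Visible;
    private int hidden = 0;
    public static int Shared;
    public string Title { get; set; }
    public int ReadOnly { get { return hidden; } }
    public int this[int i] { get { return i; } set { } }
}

public static class MemberScanner
{
    const BindingFlags PublicInstance = BindingFlags.Public | BindingFlags.Instance;

    public static List<MemberInfo> GetSerializableMembers(Type type)
    {
        List<MemberInfo> members = new List<MemberInfo>();

        // Public instance fields; private and static ones are filtered out by the flags.
        foreach (FieldInfo field in type.GetFields(PublicInstance))
            members.Add(field);

        foreach (PropertyInfo property in type.GetProperties(PublicInstance))
        {
            // Assumption: keep only properties with a public getter and setter, and no indexers.
            bool readable = property.GetGetMethod() != null;
            bool writable = property.GetSetMethod() != null;
            bool indexer = property.GetIndexParameters().Length > 0;
            if (readable && writable && !indexer)
                members.Add(property);
        }
        return members;
    }
}
```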

I have tested the implementation with many (if not all) combinations of value-type/class, serialized object category and visibility, so I can say it’s pretty robust and tolerant of what you feed it.

Results

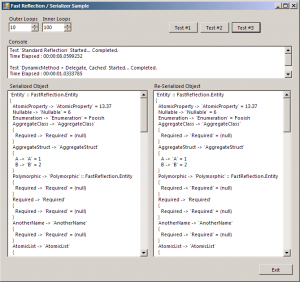

This is the whole point… how fast does “Fast .NET Reflection” go? Here are the timings for 10 outer loops (so 10 serializer creations) and 100 inner loops (100 serializations per outer loop), which means 1000 serializations of the same complex aggregate object.

Test ‘Standard Reflection’ Started… Completed.

Time Elapsed : 00:00:08.2473666

Test ‘Reflection.Emit + Delegate (No Caching)’ Started… Completed.

Time Elapsed : 00:01:52.4517968

Test ‘DynamicMethod + Delegate, Cached’ Started… Completed.

Time Elapsed : 00:00:00.9970487

Well, I did say that no caching was a very bad idea.

Still, the highlight here is that the same serialization code, run with two different reflection providers, is about eight (8!) times faster with dynamic IL methods and a healthy dose of caching than with standard reflection (roughly 1 second versus 8.2 seconds).
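For reference, the outer/inner loop structure described above can be sketched with a Stopwatch. The `Benchmark` class is hypothetical and is not the test harness from the sample; the `createSerializer` delegate stands in for whatever builds a serializer around a given reflection provider.

```csharp
using System;
using System.Diagnostics;

// Hypothetical timing harness mirroring the outer/inner loop structure.
public static class Benchmark
{
    public static TimeSpan Run(string name, int outerLoops, int innerLoops,
                               Func<Action> createSerializer)
    {
        Console.Write("Test '{0}' Started... ", name);
        Stopwatch watch = Stopwatch.StartNew();

        for (int outer = 0; outer < outerLoops; outer++)
        {
            // One serializer creation per outer loop, so setup cost is part of the timing.
            Action serialize = createSerializer();
            for (int inner = 0; inner < innerLoops; inner++)
                serialize();
        }

        watch.Stop();
        Console.WriteLine("Completed.");
        Console.WriteLine("Time Elapsed : {0}", watch.Elapsed);
        return watch.Elapsed;
    }
}
```

With 10 outer loops and 100 inner loops, `serialize` runs 1000 times in total. Whether a cache survives from one outer loop to the next depends on where the provider keeps it, which this sketch leaves open.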

Sample code

The code for this sample (C# 3.0, .NET 3.5, VS.NET 2008) can be found here : FastReflection.zip (46 Kb)

Even if you’re not interested in serialization, I suggest you take a look at the EmitHelper class and how it’s used in CachedReflector. All tasks that need Reflection in a time-critical context should use dynamic methods!