I’ve decided to repost all my remaining TV3D 6.5 samples to this blog (until I get bored). These are not new, but they were only downloadable from the TV3D forums until now!

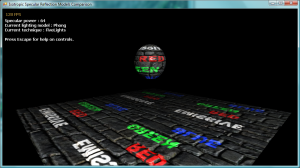

This demo (originally released as VB.Net 2005 on February 13th, 2007 here) is a visual and performance comparison (and reference implementation) of five different per-pixel lighting models for isotropic specular reflections :

- Phong reflection model

- Blinn-Phong (Blinn D1, Phong) specular distribution

- Lyon halfway method 1 (for k=2 and D = H* – L)

- Trowbridge-Reitz (Blinn D3) specular distribution

- Torrance-Sparrow (Blinn D2, Gaussian) specular distribution

My main goals were to :

- Make an optimized HLSL implementation of each model that fits in a single Shader Model 2.0 pass and supports 3 lights

- Evaluate the performance of each model in a multiple light, per-pixel rendering context

- Determine which model keeps the most numerical precision and does not produce artifacts when used with normal-mapping

Download

IsotropicModels.zip [2.7 MB] – C#3 (VS.NET 2008, TV3D 6.5 Prerelease .NET DLL Required)

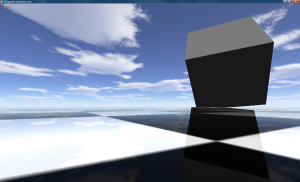

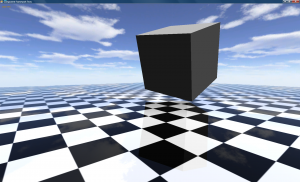

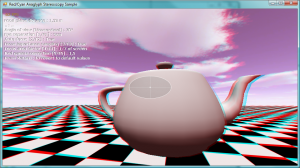

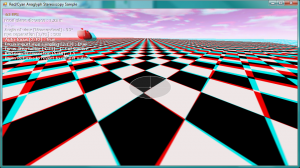

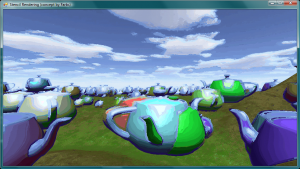

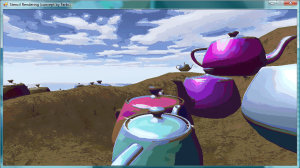

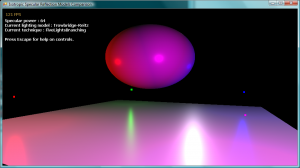

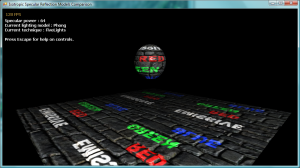

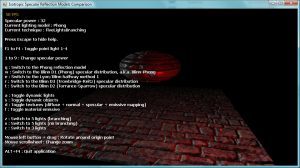

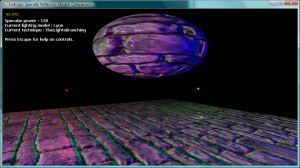

Screenshots

Details

The HLSL shader supplied with this sample was made to mimic the built-in TV3D offset-bumpmapping shader as closely as possible. As a result, almost all of its effect parameters are mapped to standard semantics. It supports :

- One colored directional light

- Two colored point lights in SM2.0, and four in more recent models (SM2a/b and SM3)

- All types of vertex fog

- Parallax mapping of texture coordinates using a grayscale heightmap

- Diffuse mapping with alpha support (a.k.a. texturing)

- Normal mapping

- Specular mapping (using the alpha channel of the normalmap)

- Emissive mapping (colored!)

- Usage of all material terms (diffuse, ambient, specular, emissive, power and opacity)

There is no support for point light attenuation, as this would have gone over the 64-instruction limit of the ps_2_0 profile. (Also, TV3D doesn’t provide semantics for the attenuation parameters.)

There is no support for spot lights for the same reasons, but I believe spots will be processed as regular point lights, ignoring their spot-specific parameters.

Techniques

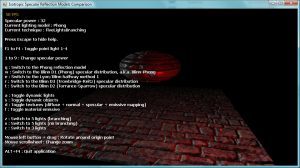

With the realtime controls, you can choose from three different techniques : FiveLightsBranching, FiveLights and ThreeLights. On SM2.0 hardware, only the third option will be valid.

The FiveLightsBranching mode uses loops and “if” statements to produce dynamic branching on SM3.0 compatible hardware. This can (but may not) be beneficial because only the calculations for enabled lights are performed.

The FiveLights and ThreeLights modes respectively do five and three lights (WHAT YOU SAY !!) but all in a static manner. They do not just unroll the loop! Most of the calculations are done with matrices, which makes them more efficient on most hardware.
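As a rough illustration of what “done with matrices” can mean, here is a small Python sketch (not the sample’s HLSL). It assumes the light directions are stacked as the rows of a matrix, so that one matrix-vector product yields the N·L term of every light at once. The names `mat_vec`, `diffuse_terms` and `lights` are made up for this example, and the actual shader may pack its data differently.

```python
def mat_vec(rows, v):
    """Multiply a matrix (given as a list of row vectors) by a vector."""
    return [sum(r_i * v_i for r_i, v_i in zip(row, v)) for row in rows]


def diffuse_terms(light_dirs, normal):
    """Return the clamped N.L term of every light from one matrix-vector product.

    light_dirs: unit vectors from the surface toward each light (hypothetical layout).
    normal: unit surface normal.
    """
    return [max(0.0, d) for d in mat_vec(light_dirs, normal)]


# Three illustrative light directions, one per matrix row.
lights = [
    (0.0, 0.0, 1.0),   # straight above the surface
    (0.6, 0.0, 0.8),   # at a slant
    (0.0, -1.0, 0.0),  # grazing from the side
]
print(diffuse_terms(lights, (0.0, 0.0, 1.0)))
```

On a GPU the same idea maps to a single `mul` of a 3x3 matrix by a vector instead of three separate dot products.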

To keep the shader “simple” (or to prevent it from becoming even more complex…) I decided not to implement a multipass 5 lights technique for SM2.0… sorry!

Blinn vs. Phong

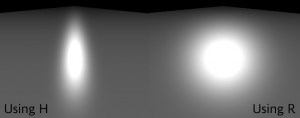

There are two major categories in the models I tested : the ones that use the halfway vector, and the Phong model that works with the reflected vector. (See the Wiki entry on Blinn-Phong for details on these vectors.)
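To make the difference between the two vectors concrete, here is a minimal Python sketch of both specular terms (again, not the sample’s HLSL). It assumes `n`, `l` and `e` are unit vectors pointing away from the surface (normal, to-light, to-eye). All function names are illustrative.

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def phong(n, l, e, power):
    """Phong: compare the eye vector with the light vector mirrored about the normal."""
    n_dot_l = dot(n, l)
    r = tuple(2.0 * n_dot_l * nc - lc for nc, lc in zip(n, l))  # reflect L about N
    return max(0.0, dot(r, e)) ** power


def blinn_phong(n, l, e, power):
    """Blinn-Phong: compare the normal with the vector halfway between light and eye."""
    h = normalize(tuple(lc + ec for lc, ec in zip(l, e)))
    return max(0.0, dot(n, h)) ** power


n = (0.0, 0.0, 1.0)
l = normalize((1.0, 0.0, 1.0))
print(phong(n, l, n, 64), blinn_phong(n, l, n, 64))
```

When light, eye and normal lie in the same plane, the angle between N and H is half the angle between R and E, so for the same power value the Blinn-Phong highlight comes out broader than the Phong one.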

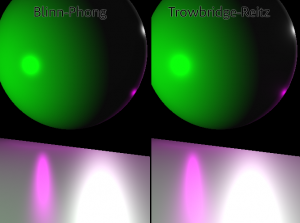

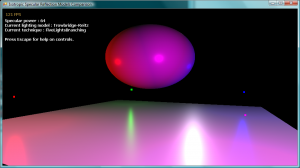

A directional light reflecting on a surface with a power value of 64

According to a paper from SIGGRAPH 2004 called Experimental Validation of Analytical BRDF Models, the halfway methods generate specular highlights with more realistic shapes than the Phong model. I realized that myself when working on an ocean rendering shader that had a Phong specular reflection : it was impossible to get a long grazing highlight when the sun was setting.

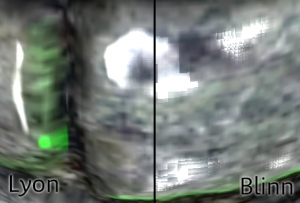

Normal Mapping Artifacts

One of the things that made me do this whole analysis is that I was dissatisfied with the image quality of Phong and Blinn-Phong when used with a normal map (that is, with per-pixel lighting). I had huge block artifacts on my water surface, and on everything with a high enough specular power value and a bumpy surface. So I found out about a reformulation of the Blinn-Phong model by Richard F. Lyon, written in 1993 (!) for Apple. (trivia : Mr. Lyon also invented the optical mouse… how awesome is that!)

This reformulation is interesting because it does not use the specular power literally as an exponentiation, it uses a distance metric and a much lower power value to produce very similar results to the Blinn-Phong model. Using a high specular power (32 or more) hurts floating-point accuracy, even in full-precision mode.
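Here is a minimal Python sketch of the idea, under stated assumptions. For unit vectors, |N − H|² = 2 − 2(N·H), so (N·H)^n can be written as (1 − |D|²/2)^n with D = N − H, which is then approximated by (1 − β|D|²)^k with β = n / (2k) and a small k. This sketch uses the plain D = N − H form with a normalized H, not the D = H* – L variant listed at the top of the post. The function names are made up for illustration.

```python
import math


def blinn_phong_term(n, h, power):
    """Classic form: raise N.H to the full specular power."""
    n_dot_h = sum(nc * hc for nc, hc in zip(n, h))
    return max(0.0, n_dot_h) ** power


def lyon_term(n, h, power, k=2):
    """Distance-based form: squared length of D = N - H and a small exponent k."""
    d = tuple(nc - hc for nc, hc in zip(n, h))
    d_sq = sum(c * c for c in d)            # equals 2 - 2 * N.H for unit vectors
    beta = power / (2.0 * k)                # matches the classic form for small |D|
    return max(0.0, 1.0 - beta * d_sq) ** k


n = (0.0, 0.0, 1.0)
h = (math.sin(0.1), 0.0, math.cos(0.1))     # halfway vector 0.1 rad off the normal
print(blinn_phong_term(n, h, 64), lyon_term(n, h, 64))
```

With k = 2 the only exponentiation left is a single squaring, whatever the specular power. The two curves agree closely near the center of the highlight and drift apart toward its edge, where the clamped form reaches zero at a finite angle.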

That said, I have seen hardware that does not have this problem. I am starting to think that it may be a driver issue, or something about mobile GPUs… In any case, the safe thing to do is choose the model that never produces artifacts, right?

The Other Blinn Distributions

The two other models I implemented (Trowbridge-Reitz and Torrance-Sparrow) were “ported” from the MATLAB code in Lyon’s reformulation paper. I wanted to test them out to see if they had the same artifact problems, and how different they looked from the classic models.

Trowbridge-Reitz is an interesting model because of how it looks. It’s slower than Blinn-Phong, but it has a distinct smoothness to it. The falloff of its specular highlights is softer than the other models… I’m not sure if it’s more accurate, but it looks pretty. Sadly, it has the same problems with normal mapping.

Torrance-Sparrow is visually identical to the Blinn-Phong model. It’s the same thing, but slower and more instruction-heavy. It does not even fit in SM2.0 with 3 lights… So I suggest you disregard it for realtime graphics.
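For reference, here is a Python sketch of the two distributions as Blinn formulated them, using `alpha` for the angle between N and H. It assumes the models are matched by half-width angle `beta`, the angle at which the Blinn-Phong term cos(alpha)^n drops to 0.5. Whether the sample’s shader matches them this way is an assumption, and the function names are illustrative.

```python
import math


def half_width_angle(power):
    """Angle (radians) at which cos(angle) ** power falls to 0.5."""
    return math.acos(0.5 ** (1.0 / power))


def trowbridge_reitz(alpha, beta):
    """Blinn D3: ellipsoid-of-revolution distribution with half-width beta."""
    cos_sq_beta = math.cos(beta) ** 2
    c_sq = (cos_sq_beta - 1.0) / (cos_sq_beta - math.sqrt(2.0))
    return (c_sq / (math.cos(alpha) ** 2 * (c_sq - 1.0) + 1.0)) ** 2


def gaussian(alpha, beta):
    """Blinn D2: Gaussian in the angle alpha with half-width beta."""
    c = math.sqrt(math.log(2.0)) / beta
    return math.exp(-((c * alpha) ** 2))


beta = half_width_angle(64)
for alpha in (0.0, beta, 2.0 * beta):
    print(alpha, math.cos(alpha) ** 64, gaussian(alpha, beta), trowbridge_reitz(alpha, beta))
```

With this matching, all three curves equal 1 at the center and 0.5 at `beta`. Past that angle the Gaussian stays close to Blinn-Phong while Trowbridge-Reitz keeps a longer tail, which is consistent with the softer falloff described above.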

Performance

I found that performance varies a lot depending on which technique you use, which shader model you support, how much your GPU is fillrate-limited instead of arithmetic-limited… So I’ll just say this : the Lyon model looks great, and it’s simple and fast enough to be worth considering. If you don’t experience the artifacts I describe, then the Blinn-Phong model is your best shot, but test Trowbridge-Reitz to see if it’s fast on your hardware.

It’s also worth mentioning that many things could be optimized by factorizing equations into small 1D or 2D textures (or perhaps a normalization cubemap), if your GPU loves pixels and hates instructions. But I don’t believe that the shader can be optimized that much by reorganizing code or removing useless statements. At least not without hurting visual quality.
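As a sketch of the lookup-texture idea, the Python below bakes x^n into a small table and reads it back with linear interpolation, roughly what a linearly filtered 1D texture would do. The table size of 256 and the function names are arbitrary choices for this example. The sample itself does not do this.

```python
def bake_power_lut(power, size=256):
    """Precompute x ** power for evenly spaced x in [0, 1]."""
    return [(i / (size - 1)) ** power for i in range(size)]


def sample_lut(lut, x):
    """Linearly interpolated lookup for x in [0, 1], like a filtered 1D texture fetch."""
    x = min(max(x, 0.0), 1.0)
    pos = x * (len(lut) - 1)
    i = min(int(pos), len(lut) - 2)
    frac = pos - i
    return lut[i] * (1.0 - frac) + lut[i + 1] * frac


lut = bake_power_lut(64)
print(sample_lut(lut, 0.99), 0.99 ** 64)
```

The trade-off is precision: with a high power, most of the table is close to zero and the curve is only sampled coarsely near 1, so the table size (or the way the input is remapped) matters.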

Component System Updates

This sample contains a major breaking change to my component framework : The Service baseclass is gone. This makes Components able to “be” services (and publish many service interfaces), and allows this sample to have a much simpler class structure… no more state classes! The components just publish whatever data they want via their service interfaces. And with the new Eventful<T> class, it’s really easy to propagate changes from a controller to a view.