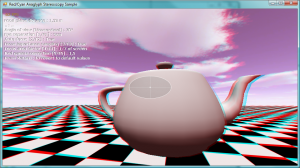

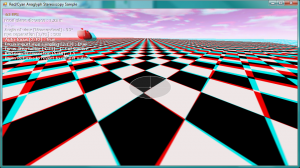

I decided to finally finish up my anaglyph stereoscopy sample, and cut the depth-of-field component, which was adding too much complexity for my limited spare time right now.

Download

Binaries : stereoscopy_bin.zip (1.9 MB – Binaries)

Code : stereoscopy_src.zip (524 KB – Source Only, get DLLs for TV3D and SlimDX from the Binaries)

Description

This sample demonstrates a couple of things :

- Optimized anaglyph filters for red/cyan stereoscopic rendering, to reduce eye-strain by minimizing retinal rivalry while still keeping some color information. As my source suggests, I’ve also implemented red channel gamma correction in the shader.

- Auto-focus of both eyes on a focal plane. The camera is in the first person and the two “virtual cameras” act like human eyes, in that they are connected to a single brain that wants to look at a single point. So the center of the screen is assumed to be that focal point, and both eyes will look at it. I used a depth rendering pass to achieve this, taking a weighted sum of the depth over a certain portion of the screen near the center (this is all tweakable in real time).
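
To make the anaglyph filter from the first bullet more concrete, here is a rough per-pixel sketch in Python rather than shader code. The 0.7/0.3 channel weights and the 1.5 gamma are assumptions taken from the commonly cited "optimized anaglyph" formula, not values read out of the sample's shader, and `optimized_anaglyph` is a hypothetical name.

```python
# Assumed constants: the sample's shader may use different values.
RED_FROM_GREEN = 0.7   # share of the left eye's green that feeds the red channel
RED_FROM_BLUE = 0.3    # share of the left eye's blue that feeds the red channel
RED_GAMMA = 1.5        # assumed gamma used to brighten the red channel


def optimized_anaglyph(left_rgb, right_rgb, red_gamma=RED_GAMMA):
    """Combine one left-eye and one right-eye pixel (floats in 0..1)."""
    _, left_g, left_b = left_rgb
    _, right_g, right_b = right_rgb

    # The red output is built from the left eye's green and blue only.
    # Ignoring the left eye's own red is what limits retinal rivalry
    # on saturated colors.
    red = RED_FROM_GREEN * left_g + RED_FROM_BLUE * left_b

    # Gamma-correct the red channel so it is not too dark through the filter.
    red = red ** (1.0 / red_gamma)

    # Green and blue (the cyan side) come straight from the right eye.
    return (red, right_g, right_b)
```

The trade-off is visible in the first line of the function body: a pure red object in the left view contributes nothing to the output, which is the price for less rivalry.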

The distance between the eyes is also tweakable, if you want to give yourself a headache.
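
The auto-focus and eye-distance ideas can be sketched roughly as follows, in Python for readability. Everything here is an assumption made for illustration: the function names, the `window_fraction` parameter, the falloff used for the weights, and the camera-space convention (x to the right, z forward). The sample itself does this with a depth rendering pass.

```python
import math


def focal_distance(depth, window_fraction=0.2):
    """Weighted average of depth samples in a window around the screen center.

    depth is a list of rows of view-space distances. window_fraction is the
    (hypothetical) share of each screen dimension that gets sampled.
    """
    rows, cols = len(depth), len(depth[0])
    half_h = max(1, int(rows * window_fraction / 2))
    half_w = max(1, int(cols * window_fraction / 2))
    cy, cx = (rows - 1) / 2.0, (cols - 1) / 2.0

    total = 0.0
    weight_sum = 0.0
    for y in range(rows):
        for x in range(cols):
            dy = (y - cy) / half_h
            dx = (x - cx) / half_w
            if abs(dx) > 1.0 or abs(dy) > 1.0:
                continue  # outside the sampled window
            # Assumed falloff: samples closer to the center weigh more.
            w = 1.0 / (1.0 + dx * dx + dy * dy)
            total += w * depth[y][x]
            weight_sum += w
    return total / weight_sum


def eye_setup(focal_dist, eye_separation):
    """Place both eyes and return the focal point and the toe-in angle.

    Each eye is yawed toward the center by toe_in radians so that both
    lines of sight cross at the focal point.
    """
    half = eye_separation / 2.0
    left_eye = (-half, 0.0, 0.0)
    right_eye = (half, 0.0, 0.0)
    focal_point = (0.0, 0.0, focal_dist)
    toe_in = math.atan2(half, focal_dist)
    return left_eye, right_eye, focal_point, toe_in
```

With this setup, widening `eye_separation` or focusing on something close both increase the toe-in angle, which is why cranking the eye distance gets uncomfortable quickly.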

I wanted to do a stereoscopy sample to show how I did it in Super HYPERCUBE, but I can’t/don’t want to release its source, so I just re-did it properly. Auto-focus is a bonus feature that I wanted to play with; sHC didn’t need that since the focal plane was always the backing wall.

This sample, like all my recent ones, uses the latest version of my components framework. There are some differences between this release and the Stencil Rendering one, but it’s mostly the same. The biggest thing is that base components (Keyboard, Sound, etc.) are not auto-loaded anymore, and must be added in the Core.Initialize() implementation. This way, if I don’t need the sound engine, I just don’t load it… makes more sense.

Hope you like it!

Hi,

You should not force a focus point. With one, several objects at the same depth will look like they are on different planes, and the headache arises. Instead, you just have to set up two parallel cameras; the effect will be far more credible and the viewer will be free to focus anywhere in the picture.

Really? But if one is to mimic exactly how eyes work, doesn’t it make sense to use a focal point?

I thought that the headache problem was due to the lack of depth-of-field, which eases out shapes that are out of focus. Also, as long as you don’t use headtracking, there’s conflicting depth and perspective perceptions…

But I was pretty sure that to have a lifelike simulation, you need a focal point.