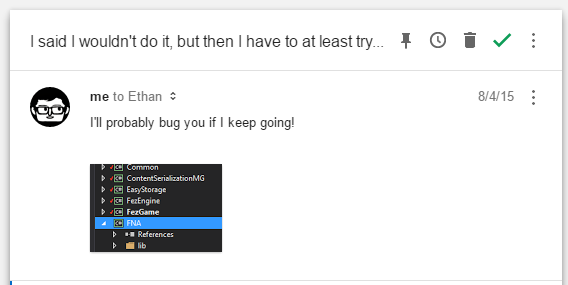

A bit more than a year ago, I sent this email to Ethan “flibitijibibo” Lee:

And work on FEZ 1.12 officially started.

The goals of this large update to the Windows PC/Mac/Linux version of FEZ were the following:

- Cut the dependency on OpenTK, the platform framework FEZ used on Windows. I had problems with it from the start, from sound card detection to windowing, VSync and fullscreen issues… I wanted to give SDL 2.0 a shot to see if it fared better.

- Have more efficient music streaming. The PC + Mac versions of FEZ used NVorbis, a C# Ogg Vorbis decoder, which seemed like a good idea because it would run on all platforms. I also wrote the streaming code that uses NVorbis and OpenAL, and it made its way into the main MonoGame repository! But that approach is also very slow, resource-intensive and heavy on disk access, so I wanted to look into a better solution that wouldn’t break music playback in areas like puzzle rooms and the industrial world.

- Have a single codebase for all PC + Mac versions of FEZ. As of 1.11, Mac and Linux used a slightly modified codebase that ran on a weird hybrid of MonoGame and what would become FNA, called MG-SDL2. The PC version ran on my fork of MonoGame (around version 3.0), which I didn’t do a great job of keeping up to date with upstream changes, because whenever I did, it usually broke in mysterious ways. This is not great for maintenance, and centralizing everything on a clean FNA back-end, with as little platform-specific code as possible, seemed like a good idea.

- Make it the Last Update. After shipping FEZ 1.11, I had little intention of making additional fixes or adding features to the game, because I simply don’t have the time with a kid and a full-time job… and working on FEZ gets old after 9 years. I did want to address the problems people have with the game, but I don’t want to do it for the rest of my life. I had spent enough time away from the game that I was somewhat enthusiastic about coming back to it, especially at my own pace, and knowing it would be the last time.

So I didn’t announce anything, not even a date, and slowly chipped away at this humongous update to FEZ. It has been in beta testing with an army of fans, speedrunners and friends since late January 2016, and over 120 bugs have been reported and fixed.

You can read the full 1.12 change log here (it’s also bundled with builds of the game), but I wanted to cover in more detail a few big changes that caused more headaches than I had anticipated.

Single-threaded OpenGL

FEZ uses a loading thread so it doesn’t block the draw/update loop while levels are loaded. Loading includes reading files, but the bulk of the load time is spent interpreting the loaded data: building models, uploading textures to GPU memory, creating shaders, calculating helper structures for collision, etc.

The original XNA version of the game did everything on the loading thread and DirectX 9 somehow made sure that everything would be just fine, even if calls to the graphics driver were made on a thread that wasn’t the main thread.

From version 1.0 to 1.07 of FEZ (PC/Mac/Linux), I used the background OpenGL context that MonoGame provides, so that I didn’t have to retool all my loading code or slow everything down by synchronizing to the main thread on every GL call. This worked fine… mostly, depending on the driver, on some platforms. My development setup worked pretty well, but I had reports of awful load times on Mac and on AMD graphics cards; clearly this wasn’t good enough.

In FEZ 1.08, I got rid of the background context in favor of a queue of GL operations that could wait until the next draw (executed in order), plus blocking GL calls for the ones that needed to happen immediately. This was minimally invasive and worked pretty well, but it slowed down load times on setups that ran fine before. Also, which operations count as GL calls was not known or defined by FEZ at that point; MonoGame internally checks whether we’re on the main thread for every XNA call that involves GL, and if not, uses a closure to defer the call to the main thread (usually by blocking). This was good enough, but not great.
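The blocking deferral described above can be sketched as follows. This is a minimal illustration in which a console program stands in for the game loop; `RunOnMainThread`, `DrainPendingCalls` and the fake “GL calls” are hypothetical names, not MonoGame’s actual API. Every call made from the loading thread has to wait for a main-thread frame, which is why this approach slowed loads down.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Threading;
using System.Threading.Tasks;

int mainThreadId = Environment.CurrentManagedThreadId;
var pendingGlCalls = new ConcurrentQueue<Action>();
var executedOn = new List<int>(); // which thread ran each fake "GL call"

// Hypothetical wrapper every graphics entry point would go through. On the main
// thread the call runs immediately; on any other thread it is queued as a
// closure and the caller blocks until the main thread has run it.
void RunOnMainThread(Action glCall)
{
    if (Environment.CurrentManagedThreadId == mainThreadId)
    {
        glCall();
        return;
    }
    var done = new TaskCompletionSource<bool>();
    pendingGlCalls.Enqueue(() =>
    {
        try { glCall(); done.SetResult(true); }
        catch (Exception e) { done.SetException(e); }
    });
    done.Task.Wait(); // the loading thread stalls here until the next frame
}

// Main-loop side: run every deferred call, in order, once per frame.
void DrainPendingCalls()
{
    while (pendingGlCalls.TryDequeue(out var call)) call();
}

// A loading thread issuing three "GL calls".
var loader = Task.Run(() =>
{
    for (int i = 0; i < 3; i++)
        RunOnMainThread(() => executedOn.Add(Environment.CurrentManagedThreadId));
});

while (!loader.IsCompleted)
{
    DrainPendingCalls();
    Thread.Sleep(1); // stands in for rendering one frame
}
loader.Wait(); // surfaces any exception from the loading thread
```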

When switching to FNA, I wanted to take advantage of its “Disable Threading” mode, which boosts performance and lowers GC strain because it doesn’t need to check whether you’re on the main thread in every graphics function: if you guarantee that you’ll never, ever use them on a secondary thread, it takes your word for it! This meant FEZ had to do the deferral/blocking itself, so the loading code had to be retooled and verified throughout the game.

I ended up using a simple method very similar to what MonoGame did: the Draw Action Scheduler. I got rid of the “I need this now!” blocking calls (e.g. loading the sky texture and then immediately sampling its pixels for the fog color), and made sure that FEZ could load and process everything on its loading thread before the first draw, which dequeues and executes these draw actions. To keep the smoothness benefits of having a loading thread, I had to tweak the granularity: sometimes it’s better to have a bunch of smaller actions that can run while the loading screen renders, rather than one big task that causes dropped frames.
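A non-blocking draw action scheduler in this spirit might look like the minimal sketch below. The names (`Schedule`, `Draw`, `gpuResources`) are hypothetical, a `List<int>` stands in for GPU resources and `Thread.Sleep` stands in for V-Sync; FEZ’s actual `DrawActionScheduler` may differ. Each chunk gets its own action, so the main thread keeps rendering frames between chunks instead of stalling on one big task.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Threading;
using System.Threading.Tasks;

int mainThreadId = Environment.CurrentManagedThreadId;
var drawActions = new ConcurrentQueue<Action>();
var gpuResources = new List<int>(); // stand-in for GPU state; main thread only

// Callable from any thread: never blocks, just queues work for the next draw.
void Schedule(Action action) => drawActions.Enqueue(action);

// Main thread, once per frame: run everything queued so far, in order, then
// render (the loading screen, or the level once it is ready).
void Draw()
{
    while (drawActions.TryDequeue(out var action)) action();
}

// Loading thread: CPU-side processing happens here, and each chunk's GPU work
// is scheduled separately rather than as one big task.
var loader = Task.Run(() =>
{
    for (int chunk = 0; chunk < 8; chunk++)
    {
        int processed = chunk * chunk; // pretend this is expensive model/collision work
        Schedule(() =>
        {
            if (Environment.CurrentManagedThreadId != mainThreadId)
                throw new InvalidOperationException("graphics work ran off the main thread");
            gpuResources.Add(processed);
        });
    }
});

// Main loop: keep drawing until loading is done and no draw actions remain.
while (!loader.IsCompleted || !drawActions.IsEmpty)
{
    Draw();
    Thread.Sleep(1); // stands in for waiting on V-Sync
}
loader.Wait();
```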

Here’s a fun one: I didn’t want to change FNA’s code, but I wanted a Texture2DReader that’s safe to call on a loading thread… so I wrote a FutureTexture2DReader that reads the file inline, then lets you upload the texture to the GPU in a second step:

var futureAtlas = input.ReadObject

DrawActionScheduler.Schedule(() => existingInstance.TextureAtlas = futureAtlas.Create());
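The two-phase idea behind FutureTexture2DReader can be sketched without FNA by returning the upload step as a closure: the loading thread does all the parsing, and the main thread later invokes the closure, much like `futureAtlas.Create()` above. The file layout, `ReadFutureTexture` and `UploadToGpu` are hypothetical; in FNA the second phase would create a `Texture2D` and upload its data.

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading.Tasks;

int mainThreadId = Environment.CurrentManagedThreadId;
var drawActions = new ConcurrentQueue<Action>();
string? textureAtlas = null;

// Phase 2, main thread only: stand-in for creating the GPU texture and uploading texels.
string UploadToGpu(int width, int height, uint[] texels)
{
    if (Environment.CurrentManagedThreadId != mainThreadId)
        throw new InvalidOperationException("GPU upload attempted off the main thread");
    return $"{width}x{height} texture ({texels.Length} texels)";
}

// Phase 1, safe on the loading thread: all file parsing happens here, and the
// GPU step is returned as a closure to run later.
// Hypothetical layout: int32 width, int32 height, then width*height 32-bit texels.
Func<string> ReadFutureTexture(byte[] data)
{
    int width = BitConverter.ToInt32(data, 0);
    int height = BitConverter.ToInt32(data, 4);
    var texels = new uint[width * height];
    Buffer.BlockCopy(data, 8, texels, 0, texels.Length * sizeof(uint));
    return () => UploadToGpu(width, height, texels);
}

// A fake 2x2 texture file.
var fakeFile = new byte[8 + 2 * 2 * sizeof(uint)];
BitConverter.GetBytes(2).CopyTo(fakeFile, 0);
BitConverter.GetBytes(2).CopyTo(fakeFile, 4);

// Loading thread: read now, schedule the upload for the next draw.
Task.Run(() =>
{
    Func<string> createAtlas = ReadFutureTexture(fakeFile);
    drawActions.Enqueue(() => textureAtlas = createAtlas());
}).Wait();

// Next draw, on the main thread: run the deferred upload.
while (drawActions.TryDequeue(out var action)) action();
Console.WriteLine(textureAtlas); // 2x2 texture (4 texels)
```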

I also realized that there are a lot of hidden GL operations here and there that only happen in some circumstances and can blow the game up big-time if you’re not careful. There’s no safety net in FNA’s no-threading mode, so you have to be really confident that every case is covered. After a year, I’m pretty confident. :)

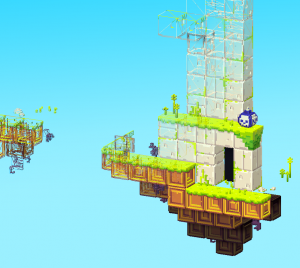

Screen scaling modes

The saga of FEZ resolutions and black bars is hard to justify. The excuses have varied from “it was meant to be run in 720p, so we only offer multiples of that” to “okay I guess we can do 1080p but it won’t look great” to “okay I guess we don’t actually have to pillarbox, but sometimes we’ll still letterbox”.

I think most people will be happy with the implementation we went with in 1.12:

- No more black bars. The handful of situations where black bars were still required (mostly because I had been lazy and assumed a 16:9 aspect ratio) have been reworked. The only downside is that you might see the vertical ends of a level if you try hard enough, but the overhauled presentation is worth it. One sensible exception: if you use a fullscreen resolution that does not match your display adapter’s aspect ratio, the game detects it and adds black bars so that the image does not appear distorted.

- You choose the scaling mode. Not literally 1x/2x/3x, because specific levels control that, but you choose whether you want the intended zoom level, prefer pixel-perfect scaling (which may cause a wider-than-intended zoom), or want to compromise with a supersampled view at the intended zoom level. The latter is my favorite: it has no impact on pixel-perfect resolutions (like 720p and 1440p) but, for instance, renders to a 1440p backbuffer at 1080p to minimize pixel crawl/jitter… and it provides anti-aliasing in first-person mode and whenever rotating objects are used. It’s a bit softer, so some people might prefer not to use it, but it’s an option!
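The three scaling modes boil down to different choices of scale factor and backbuffer size. Here is a hypothetical sketch, assuming the intended zoom corresponds to a 720-line reference view (suggested by the 720p/1440p examples above, but not confirmed as FEZ’s actual numbers); the function names are made up for illustration.

```csharp
using System;

// Assumption for illustration: the intended zoom matches a 720-line reference view.
const int ReferenceHeight = 720;

// Intended zoom: scale the reference view to fill the output exactly. At 1080p
// this is 1.5x, so art pixels land on fractional screen pixels (pixel crawl).
double IntendedScale(int outputHeight) => (double)outputHeight / ReferenceHeight;

// Pixel-perfect: largest whole-number scale that fits. Art pixels stay crisp,
// but the leftover lines show more of the world (a wider zoom than intended).
int PixelPerfectScale(int outputHeight) => Math.Max(1, outputHeight / ReferenceHeight);

// Supersampled: render at the smallest whole multiple of the reference view
// that covers the output, then downsample to the output size. At 720p and
// 1440p the backbuffer equals the output, so nothing changes.
int SupersampledBackbufferHeight(int outputHeight)
{
    int scale = Math.Max(1, (outputHeight + ReferenceHeight - 1) / ReferenceHeight); // ceiling division
    return scale * ReferenceHeight;
}

foreach (int height in new[] { 720, 1080, 1440 })
{
    Console.WriteLine($"{height}p: intended {IntendedScale(height)}x, " +
                      $"pixel-perfect {PixelPerfectScale(height)}x, " +
                      $"supersampled backbuffer {SupersampledBackbufferHeight(height)} lines");
}
```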

Visual interpolation between fixed timesteps

Let me tell you the story of a .NET API misunderstanding that has deep consequences…

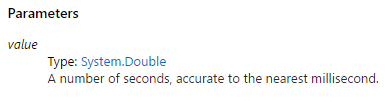

Let’s say you use the TimeSpan.FromSeconds() factory method to make a TimeSpan object that represents the duration of a 60hz frame. You’d use it like this:

var frameDuration = TimeSpan.FromSeconds(1 / 60.0);

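And I wouldn’t blame you for it. The method takes a double, and there’s no indication that it would do any kind of rounding… but if you read the documentation, you find out that the value is only considered accurate to the nearest millisecond. So instead of 16.667ms, you get a 17ms TimeSpan.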

It’s not because TimeSpan’s precision stops at milliseconds; it stores everything in ticks of 100 nanoseconds. It makes no sense. But here we are.
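On the .NET Framework, `TimeSpan.FromSeconds(1 / 60.0)` comes back as exactly 17ms. Since runtimes have not all rounded this the same way, a portable way to get an exact frame duration is to do the arithmetic in ticks. A small C# sketch (the variable names are illustrative):

```csharp
using System;

// TimeSpan counts 100-nanosecond ticks (TimeSpan.TicksPerSecond == 10,000,000),
// so building the duration from ticks sidesteps any rounding in FromSeconds(double).
TimeSpan frame60 = TimeSpan.FromTicks(TimeSpan.TicksPerSecond / 60);   // 166,666 ticks ≈ 16.6666 ms
TimeSpan frame120 = TimeSpan.FromTicks(TimeSpan.TicksPerSecond / 120); // 83,333 ticks ≈ 8.3333 ms

// The millisecond-rounded step FEZ ended up with, and the update rate it implies.
TimeSpan rounded = TimeSpan.FromMilliseconds(17);
double updatesPerSecond = 1.0 / rounded.TotalSeconds; // ≈ 58.82, not 60

// Integer division still truncates, but the drift is only 40 ticks (4 µs) per
// second, versus 1/3 ms lost on every frame with millisecond rounding.
long driftTicksPerSecond = TimeSpan.TicksPerSecond - 60 * frame60.Ticks;
```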

Because of this issue, FEZ was designed around a 17ms timestep for the 5 years of its development. The physics were tweaked with a 17ms fixed timestep in mind, and yep, it skipped frames like nobody’s business, because it ran at approximately 58.82 frames per second (1000 / 17) instead of the intended 60.

To help with this in FEZ 1.08 (PC/Mac/Linux), I decoupled the update and draw calls such that more than one draw can be done for one engine update. This eliminates tearing with a 60hz V-Sync, but once or twice every second, the same frame is presented twice in a row to the screen, which makes the game feel jittery. It was relatively minor, so I let it slide.
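Here is a minimal simulation of why a 17ms fixed step shows repeated frames under 60hz V-Sync, assuming a standard accumulator-style loop (an assumption; FEZ’s real loop may differ). Time is counted in units of 1/3000 s so that both periods are exact integers.

```csharp
using System;

// Units of 1/3000 s: a 17ms update step is 51 units, a 60hz V-Sync interval is 50.
const int UpdateStep = 51;
const int VsyncInterval = 50;

// Fixed-timestep loop with decoupled draws: each V-Sync adds the elapsed time to
// an accumulator, runs as many 17ms updates as fit, then draws.
(int Updates, int RepeatedFrames) Simulate(int draws)
{
    int accumulator = 0, updates = 0, repeated = 0;
    for (int i = 0; i < draws; i++)
    {
        accumulator += VsyncInterval;
        int updatesThisDraw = 0;
        while (accumulator >= UpdateStep)
        {
            accumulator -= UpdateStep;
            updatesThisDraw++;
        }
        updates += updatesThisDraw;
        if (updatesThisDraw == 0) repeated++; // nothing changed: same frame shown twice
    }
    return (updates, repeated);
}

var (totalUpdates, repeatedFrames) = Simulate(60 * 51); // 51 seconds at 60hz
Console.WriteLine($"{totalUpdates} updates, {repeatedFrames} repeated frames"); // 3000 updates, 60 repeated frames
```

Over 51 seconds, 3060 draws see only 3000 updates: 60 repeated frames, or about 1.18 per second, which lines up with the “once or twice every second” stutter.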

Fast-forward to 1.12, in which I decide to properly support my fancy new 120hz monitor. Drawing every frame twice isn’t exactly great: it does make the game match the monitor’s refresh rate, but it doesn’t look any better than 60hz. Then Gyoo, while play-testing the game, notices the original issue, that the game jitters even at 60hz… and it sinks in that the same root cause locks the game to an update rate that doesn’t make any sense. I have to do something about it.

There are two ways to go here, and both are painful: interpolate, or switch to a variable timestep. I had already tried the latter when I was still developing the game for Xbox 360, and it’s very hard to pull off. The potential for hard-to-reproduce physics bugs is real, and it would mean retesting the whole game many times until we got it right. However, some parts of the game are easy to move to a variable timestep in isolation, since they don’t depend on the game’s physics or have no impact on gameplay. So I went with a hybrid solution:

- Gomez uses interpolation. This means that for every update, the next frame’s position is also computed, and when Gomez gets drawn, he gets interpolated to the right position between those two frames depending on timing.

- The camera, sky, moving platforms, grabbed/held cubes and cube bits use a variable timestep. These could relatively easily be transitioned to compute their movement/position per draw instead of per update, which gave an instant boost to fluidity, especially at framerates higher than 60hz.

- Everything else is still at 17ms fixed timesteps. It turns out that it doesn’t matter for most entities to have fully smoothed movement, especially at that chunky world resolution, and with a fully smooth camera. So I stopped there.
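The hybrid scheme above can be sketched as follows: a 17ms fixed update for gameplay, the player drawn by interpolating between the last two simulated states, and a camera integrated with the real frame time on every draw. This is a hypothetical illustration using `System.Numerics.Vector2`; FEZ’s actual code, names and movement rules are not described in the text.

```csharp
using System;
using System.Numerics;

const float Step = 0.017f; // 17ms fixed gameplay step

Vector2 playerPrevious = Vector2.Zero; // player state at the last fixed update
Vector2 playerNext = new(1f, 0f);      // state already simulated one step ahead
float timeSinceUpdate = 0f;            // draw time accumulated since the last update
Vector2 cameraPosition = Vector2.Zero;
Vector2 cameraVelocity = new(2f, 0f);  // units per second

// Fixed-step gameplay update: "next" becomes "previous", and the following
// state is simulated (here the player simply moves 1 unit per step).
void FixedUpdate()
{
    playerPrevious = playerNext;
    playerNext += new Vector2(1f, 0f);
    timeSinceUpdate -= Step;
}

// Called once per displayed frame with the real frame duration, at any refresh rate.
void Frame(float frameSeconds)
{
    timeSinceUpdate += frameSeconds;
    while (timeSinceUpdate >= Step) FixedUpdate();
    cameraPosition += cameraVelocity * frameSeconds; // variable timestep: integrate per draw
}

// Player rendering: blend the two fixed-step states by how far we are into the step.
Vector2 RenderedPlayerPosition()
{
    float alpha = Math.Clamp(timeSinceUpdate / Step, 0f, 1f);
    return Vector2.Lerp(playerPrevious, playerNext, alpha);
}
```

Calling `Frame(1f / 120f)` on every 120hz refresh moves the camera a little each frame, while the player’s rendered position glides between fixed-step states instead of snapping once per 17ms update.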

This sounds like a fun time, but I’ve been fixing regressions caused by these changes ever since I started the task back in April 2016. There were a lot of corner cases: places where the camera’s position was assumed to be the same in matching update and draw calls, jittering stars and parallaxed elements and… the list goes on. But the result is there: the game looks really, really good at 120hz right now.

Additional reading

Ethan wrote a whole series of posts during FEZ 1.12 development that cover other things the patch addresses:

- Controller-related changes and remapping support

- Menus, multisampling, some relatively outdated scaling mode talk

- Sound and music

- Ethan’s version of the 17ms issue

- FNA-related talk

- Misc fixes

Special Thanks

First off, huge, HUGE thanks to Ethan for making FNA in the first place, motivating me to finish this patch, and working tirelessly on it since day one. Your support, help and dedication blow me away. If you appreciate the work he’s put into FEZ 1.12, consider supporting him on Patreon.

And then, the amazingly helpful testers who have been testing 1.12 in their free time to help the project, in no particular order (and I hope I didn’t forget anyone!):

- MistahKurtz7

- Maik Macho

- Evgeny Vrublevsky

- Gimlao

- Yauxo

- Luis F. Correia

- Gyoo

- Juikuen

- Stanisław Gackowski

- Vulajin

Thank you all so much, you made this possible. <3

As you can see, a lot of them are speedrunners. I’m very grateful for the passion of the FEZ speedrunning community; watching things like Vulajin running FEZ at AGDQ last year was amazing, and I wanted to make the game solid for you guys!