Here’s a follow-up to my previous post on “Loop Parallelism”.

A faster PersistentThread

In my last post, I wrote a class that keeps a single thread alive so it can be re-used instead of creating threads over and over, an operation that sounded like a lot of overhead when you have over 30 updates per second (e.g. in a game loop). However, the benchmarks showed that using synchronization primitives was actually slower than just recreating the threads every time!

It turns out that using a Monitor for the wait/signal operations wasn’t such a good idea. There are other primitives in .NET that can be used for that simple operation, where all you need is a boolean semaphore. After a little research, the best object for the job seemed to be the ManualResetEvent. It lets you block a thread with the WaitOne() method and wake it from another thread with the Set() method. Because the event stays signaled until Reset() is called, the woken thread has to reset it before waiting again.

I ended up using two ManualResetEvents: one for a thread waiting to be started with a new work unit, another to simulate a thread “Join” operation (without actually killing the thread). And in this benchmark, the cost of using them is MUCH smaller than with Monitors! Here are the new performance numbers:
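To make the two-event idea concrete, here is a minimal sketch of how such a class could look. It is not the class from the downloadable project: the name `PersistentWorker`, its members and the use of a plain `Action` as the work unit are all assumptions made for illustration, and it assumes a single controlling thread calls `Start()` and `Join()`.

```csharp
using System;
using System.Threading;

// Hypothetical sketch, not the PersistentThread class from the project.
public sealed class PersistentWorker
{
    // Signaled when a new work unit is ready for the worker thread.
    private readonly ManualResetEvent workReady = new ManualResetEvent(false);
    // Signaled when the worker is idle (starts out signaled: nothing is running).
    private readonly ManualResetEvent workDone = new ManualResetEvent(true);
    private readonly Thread thread;
    private Action work;

    public PersistentWorker()
    {
        thread = new Thread(Loop);
        thread.IsBackground = true; // don't keep the process alive on exit
        thread.Start();
    }

    // Hands a work unit to the kept-alive thread and returns immediately.
    public void Start(Action workUnit)
    {
        workDone.WaitOne();  // make sure the previous unit has finished
        workDone.Reset();
        work = workUnit;
        workReady.Set();     // wake the worker
    }

    // Simulated "Join": blocks until the work unit is done, thread stays alive.
    public void Join()
    {
        workDone.WaitOne();
    }

    private void Loop()
    {
        while (true)
        {
            workReady.WaitOne(); // sleep until Start() signals
            workReady.Reset();   // manual-reset events stay signaled until reset
            work();
            workDone.Set();      // release anyone blocked in Join()
        }
    }
}
```

Typical use would be to call `Start()` with half of the data, process the other half on the current thread, then call `Join()`. An unhandled exception inside the work unit would kill the worker thread in this sketch, so real code would want some error handling around `work()`.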

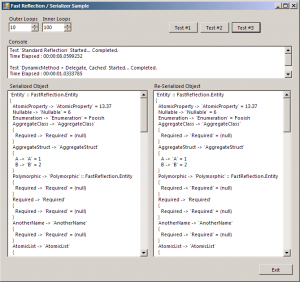

Test ‘Multi-Threaded, PersistentThread (single kept-alive thread w/ generic context & delegate)’ Started… Completed.

Time Elapsed : 00:00:11.3376303 s

Test ‘Multi-Threaded, ParameterizedThreadStart’ Started… Completed.

Time Elapsed : 00:00:11.4678206 s

Test ‘Single-Threaded’ Started… Completed.

Time Elapsed : 00:00:22.3374592 s

Seeing as the single-threaded version takes ~22.34 seconds to execute, the theoretical minimum time it could take on my Core 2 Duo is ~11.17s. And the new PersistentThread takes only ~0.17s more than that!
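For reference, here is the arithmetic worked out from the timings listed above, assuming a perfect split of the work across the two cores as the ideal case:

$$
T_{\min} \approx \frac{22.337\ \text{s}}{2} \approx 11.169\ \text{s},
\qquad
11.338\ \text{s} - 11.169\ \text{s} \approx 0.169\ \text{s},
\qquad
\text{speedup} \approx \frac{22.337}{11.338} \approx 1.97
$$

So the PersistentThread run sits roughly 1.5% above the ideal two-core time.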

Also notice that the former speed champion, the base-line ParameterizedThreadStart method, is now slightly slower than the new PersistentThread. Honestly, I don’t think one can do any better with just two threads.

Task Parallel Library

The fancy lads at Microsoft have been working hard lately on bringing new ways of working with concurrent code. It was one of the big subjects on Channel9 this autumn.

So back in June they released a CTP of the Parallel Extensions for .NET, which features the Task Parallel Library, a front-end for using it in the form of the Parallel static class, and Parallel LINQ. I love it, and I can’t wait to use the official, final release in .NET 4.0. But for now, the CTP is an excellent reference point to see what kind of performance and ease of integration the TPL brings to your project.

The simplicity of my test codepath for the TPL boggles the mind, compared to all the other methods I’ve tested.

    for (int i = 0; i < OuterLoops; i++)
        Parallel.ForEach(testData, sample => { sample.Number = SlowFunctions.Bessel(sample.Number / rnd.Next()); });

The number of threads used, how the data is partitioned, and the actual thread/context handling are all abstracted away. It really makes C# 3.0 lambda expressions shine.
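One caveat, assuming `rnd` in the snippet above is a single shared `System.Random`: its instance methods are not thread-safe, so calling `Next()` from several threads at once can corrupt its state. The sketch below shows one way around that with a per-thread `Random`, using the thread-local overload of `Parallel.ForEach` as it exists in the final .NET 4.0 API (the CTP signatures may differ). The `Sample` type, the `double` field, the `slowFunction` parameter and the `+ 1.0` guard against dividing by zero are all illustrative assumptions, not code from the test project.

```csharp
using System;
using System.Collections.Generic;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical stand-in for the benchmark's data type.
public sealed class Sample
{
    public double Number;
}

public static class TplSketch
{
    private static int seed = Environment.TickCount;

    public static void Run(IEnumerable<Sample> testData, Func<double, double> slowFunction)
    {
        Parallel.ForEach(
            testData,
            // localInit: each worker gets its own Random with a distinct seed.
            () => new Random(Interlocked.Increment(ref seed)),
            // body: receives the worker-local Random and passes it along.
            (sample, loopState, rnd) =>
            {
                sample.Number = slowFunction(sample.Number / (rnd.Next() + 1.0));
                return rnd;
            },
            // localFinally: nothing to clean up for a Random.
            rnd => { });
    }
}
```

With the benchmark's own function this would be called as `TplSketch.Run(testData, SlowFunctions.Bessel)`, assuming `Bessel` takes and returns a `double`.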

So, it’s cute alright. But how does it perform? As I understand it (and I’m not sure of the details), the TPL allocates as many threads as it thinks would be beneficial, like an automatic thread pool, based on how many processors you have and the workload you give it. So I suspect that it uses more than two threads if doing so will give more processor time to your code. This is relevant when many other threads are waiting for the processor: the more of those threads are yours, the better the chance that your code gets executed.

So here are the numbers :

Test ‘Task Parallel Library’ Started… Completed.

Time Elapsed : 00:00:11.4088743 s

It’s definitely fast, but not quite as fast as my new PersistentThread (it trails by about 0.07s)! But it’s so much more flexible, easy to use and scalable that it’s the obvious choice for concurrency in .NET… once it’s officially released. :)

A final note on the Parallel Extensions: there’s a new class called ManualResetEventSlim in this CTP, whose name suggests that it’s more optimized or leaner. I didn’t use it because it depends on libraries that cannot be shipped, but a quick test showed that the performance was more or less the same as ManualResetEvent.

Vista > XP

Since the last post, I upgraded (though some might say downgraded…) to Windows Vista. The experience isn’t totally smooth since my laptop is being dumb, but one of the upsides of upgrading is having access to new kernel code that appears to be more efficient.

My last benchmarks showed quite a margin between the different tests (generic context & delegate, class-local context, etc.). In Vista, the results are pretty much identical across all of them:

Test ‘Multi-Threaded, ParameterizedThreadStart’ Started… Completed.

Time Elapsed : 00:00:11.5178019 s

Test ‘Multi-Threaded, Class-Local Context’ Started… Completed.

Time Elapsed : 00:00:11.4912111 s

Test ‘Multi-Threaded, Generic Context & Delegate’ Started… Completed.

Time Elapsed : 00:00:11.4255807 s

Those differences are really insignificant (less than 0.1s over ~11.5s). I suspect that either calling delegates, creating objects, or just casting objects has become faster in Vista. It’s really hard to tell, but the good news is that you can choose whichever approach you like best: they’re all equally fast.

Two threads per core?

A final test I wanted to share is doubling the number of threads on an otherwise idle processor to see what happens. So instead of creating a single PersistentThread, I created 3, which means 4 active threads including the current one, or two per core. The data is split into four equal parts, and…
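For illustration, here is a minimal sketch of that kind of four-way split. To keep it self-contained it uses plain `Thread` objects rather than PersistentThreads, and the `FourWaySplit` class, its `Run` method and the index-based `process` callback are assumptions of mine, not the code that produced the numbers below.

```csharp
using System;
using System.Threading;

// Hypothetical sketch of splitting a data set across several threads.
public static class FourWaySplit
{
    // Calls process(i) for every index in [0, length) using 'parts' threads
    // in total: parts - 1 helper threads plus the current one.
    public static void Run(int length, int parts, Action<int> process)
    {
        var helpers = new Thread[parts - 1];
        for (int p = 0; p < parts; p++)
        {
            // Contiguous range [from, to); integer math spreads any remainder.
            int from = (int)((long)length * p / parts);
            int to = (int)((long)length * (p + 1) / parts);
            ThreadStart range = () =>
            {
                for (int i = from; i < to; i++) process(i);
            };

            if (p < parts - 1)
            {
                helpers[p] = new Thread(range);
                helpers[p].Start();
            }
            else
            {
                range(); // the current thread takes the last range
            }
        }

        // Wait for every helper before returning.
        foreach (Thread helper in helpers) helper.Join();
    }
}
```

Calling `FourWaySplit.Run(data.Length, 4, i => Work(data[i]))`, with `Work` standing in for the per-element function, would give the "3 extra threads plus the current one" layout described above.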

Test ‘Multi-Threaded, 3x PersistentThread’ Started… Completed.

Time Elapsed : 00:00:12.3893090

Time Elapsed : 00:00:11.3681673

Time Elapsed : 00:00:15.0850932

Time Elapsed : 00:00:10.2940759

Time Elapsed : 00:00:16.0883727

… and I don’t know what to say. The results are ridiculously unstable, and the code is just confusing and ugly. I wouldn’t recommend it.

Also, I did consider using the .NET 2.0 ThreadPool static class, but it seemed a little confusing to use, especially if I want to join the threads after they’ve all finished their work. I think I can wait for the TPL for a proper solution. ;)
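For what it’s worth, one common way to get a “join” out of the ThreadPool is to count down the pending work items and signal an event when the last one finishes. The sketch below shows that pattern. I haven’t benchmarked it, and the `PoolJoin` class and `RunAll` method are hypothetical names, not part of the test project.

```csharp
using System;
using System.Threading;

// Hypothetical sketch: queue work on the ThreadPool and wait for all of it.
public static class PoolJoin
{
    public static void RunAll(params Action[] workItems)
    {
        if (workItems.Length == 0) return;

        int pending = workItems.Length;
        using (var allDone = new ManualResetEvent(false))
        {
            foreach (Action item in workItems)
            {
                Action current = item; // per-iteration copy for the closure
                ThreadPool.QueueUserWorkItem(state =>
                {
                    try
                    {
                        current();
                    }
                    finally
                    {
                        // The last item to finish wakes the waiting thread.
                        if (Interlocked.Decrement(ref pending) == 0) allDone.Set();
                    }
                });
            }

            allDone.WaitOne(); // the "join": block until every item has run
        }
    }
}
```

It works, but compared to a single `Parallel.ForEach` call it is a fair amount of plumbing for the same result.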

Updated test project

Here’s the updated project. Note that you’ll now need the Parallel Extensions CTP for it to compile!

LoopParallelismUpdate.zip (150 KB), Visual Studio 2008 solution for C# 3.0 (.NET 3.5).