So I started toying around (read: developing full-time) with Xbox programming using XNA GS 3.0. In fact I took a big Windows Game project with many satellite Game Libraries, a Content Pipeline Extension, a content editor, an automatic serialization library… and “ported” most of it to Xbox. But since the editor will remain Windows-based, the engine and most of the code need to stay cross-platform, compatible with both Windows and Xbox.

And I hit a few walls.

I feel like these are things many people working with XNA will encounter. XNA’s been around for a while now, since version 1.0. Many of these things are already widely discussed in the blogosphere and forums. Also, I’m aware that GS 3.1 is around the corner and it’ll address at least the first point of my rant…

Still, here’s a handful of things that surprised or annoyed me in the transition:

1. ContentTypeReaders need to stay out of Content Pipeline Extension project(s)

Suppose you have a pretty big project that has custom datatypes, and those datatypes are compiled to XNB files using custom ContentTypeWriters and then read back using ContentTypeReaders. You usually need a Content Pipeline Extension project for that, and this project would reference your Engine or whatever project owns the datatypes that you want to compile.

Until very recently, I never quite understood why all official samples had the Reader classes in the Game project, while the Processors, Writers and Importers were all in the Content Pipeline Extension project. Why decouple it like that, and why join them with a fully-qualified assembly string in the GetRuntimeReader method of Writer classes? Moreover, putting Readers in my Extension project always worked in my Windows-only solutions, and it all felt nice and clean.

But when doing everything for Xbox and Windows, the reason becomes clear…

The Content Pipeline Extension project is a standard C# project in XNA GS 3.0. Not a “Game Library” project or any other special container. This means that it won’t be duplicated if you do an Xbox version, and it makes sense; you only need to compile content on your Windows machine.

So your Content Pipeline Extension needs to have a reference to your content datatypes, in some Game Library project. Since the Extension is for Windows only, it’d reference the Windows version of that Game Library. And then if your Readers are in the Extension, your Xbox game needs to reference it to load assets… which means the Xbox and Windows versions of your data structure Library project would coexist on the Xbox. This can’t work!

Besides, the Xbox project doesn’t need to access Processors, Importers and Writers. All it needs is to be able to read content and then use it. These other content pipeline classes may even use Windows-specific assemblies like GDI+, why not? They’re certainly useful for image processing.

So bottom line, keep Readers in your Game project if it’s a small project, or in a Game Library for both Xbox and Windows. And if your Content Pipeline can reference this Readers-container, then no need for a hardcoded String for GetRuntimeReader, you can just get the assembly-qualified-name from Reflection classes!
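Here is a minimal sketch of that last idea. `LevelReader` and `LevelWriter` are hypothetical names, and both are plain stand-in classes so the snippet compiles without XNA references. In a real project they would derive from `ContentTypeReader<T>` and `ContentTypeWriter<T>`, and `GetRuntimeReader` would be an override that takes a `TargetPlatform` argument. It also assumes the Windows and Xbox versions of the Readers library share the same assembly name, otherwise the string produced on Windows would not resolve on the Xbox.

```csharp
using System;

// Stand-in for a reader living in the shared Game Library.
// In a real project: class LevelReader : ContentTypeReader<Level>
public class LevelReader { }

// Stand-in for a writer living in the Content Pipeline Extension.
// In a real project: class LevelWriter : ContentTypeWriter<Level>
public class LevelWriter
{
    // Mirrors ContentTypeWriter<T>.GetRuntimeReader (platform parameter omitted here).
    // Asking the type for its name avoids a hand-typed string that silently
    // goes stale when the namespace, class name or assembly version changes.
    public string GetRuntimeReader()
    {
        return typeof(LevelReader).AssemblyQualifiedName;
    }
}
```

The returned string has the form "TypeName, AssemblyName, Version=…", which is the same shape as the hardcoded strings in the official samples.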

2. The Xbox doesn’t like garbage

The first thing I noticed after I got the game running was hiccups in the framerate, every two seconds or so. Apart from that, the framerate was a constant 60. I half-expected this… it’s the garbage collection.

This paper by three people at the FZI Research Center for Information Technology explains it much better than I can, so I’ll just quote them:

The .NET Framework on PC uses a generational approach, making garbage collections less painful and more resilient to large numbers of objects. With 512 MiB of GDDR3 memory shared between GPU and CPU the Xbox 360 garbage collector can’t afford such luxury.

The garbage collection of the .NET Compact Framework for the Xbox 360 always has to check all objects. Therefore the time a collection takes increases linearly with the number of objects. Further a collection will be triggered whenever 1 MiB of memory has been allocated.

This means you really need to stop carelessly allocating on the heap when making an Xbox game. There are several “known causes” of heap garbage with the XNA Framework, but it’s easy to start going on a witch-hunt and replacing all foreach(in) statements with plain for(;;) loops or stuff like that… It’s a much better idea to find out what the bottlenecks in your application are and fix them, starting with the biggest ones. You’ll probably end up solving most of the jittering without making your code look like C.
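Once profiling points at a type that gets allocated every frame, one common fix is to reuse instances instead of creating new ones. The following is only a sketch of that idea, not something taken from the paper: `Pool<T>` and `Particle` are hypothetical names, and the pool is deliberately simplistic (no thread safety, no state reset on release).

```csharp
using System;
using System.Collections.Generic;

// Hands out pre-allocated instances so that steady-state gameplay
// does not allocate, and therefore does not feed the collector.
public class Pool<T> where T : class, new()
{
    private readonly Stack<T> free;

    public Pool(int capacity)
    {
        // Pay the allocation cost once, at load time.
        free = new Stack<T>(capacity);
        for (int i = 0; i < capacity; i++)
            free.Push(new T());
    }

    public int Available { get { return free.Count; } }

    // Falls back to a fresh allocation only when the pool runs dry.
    public T Acquire()
    {
        return free.Count > 0 ? free.Pop() : new T();
    }

    // The caller is responsible for resetting the item's state.
    public void Release(T item)
    {
        free.Push(item);
    }
}

// Example pooled type; any reference type with a default constructor works.
public class Particle
{
    public float X, Y, Life;
}
```

A particle system would then call `Acquire()` when spawning and `Release()` when a particle dies, rather than `new Particle()` each time.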

The above paper presents some options for memory profiling like the CLR Profiler and the XNA Framework Remote Performance Monitor. I have yet to try either, but they sound like excellent free tools to address this issue.

Update: See this post for more information on typical causes of heap garbage.

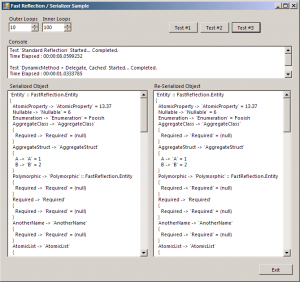

3. The .NET Compact Framework needs your help

The Xbox .NET implementation is not the full-blown framework; it’s based on a subset called the Compact Framework, which is also used on mobile devices and embedded systems. This comes at a small cost: you have to complete it to fit your needs.

It’s actually pretty cool that LINQ is supported and all C# 3.0 features work flawlessly. But here’s a short list (off the top of my head) of things I found missing, some important, some easily worked around, all of them at least mildly annoying:

- Enum.GetValues(), Enum.GetNames(), Enum.GetName() : These are all missing from the CF. There is an old thread on the XNA forums that proposes alternatives that use Reflection. I found them to be working great.

- ParameterizedThreadStart : You can’t start a Thread with a context object in the CF. You then need a shared context object in the parent class.

- Type.GetInterface(string, [bool]) : You can’t query the interface of a type via reflection in the CF… at least not a single one by name. The GetInterfaces() method is supported, so might as well just use that.

- Math.Log(double, double) : Actually, there is a Log(double) function, but it’s with the natural base. The custom-base one is not supported. Seriously? (and I know, the workaround is a one-liner)

- HashSet : I love the .NET 3.5 HashSet generic class. It’s really complete, super fast… but the CF doesn’t have it. I ended up faking one with a Dictionary as a backing collection, and rewriting the set operators (UnionWith, IntersectWith, etc.) that I really used.
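To give an idea of how small most of these workarounds are, here is a rough sketch of four of them. All names (`CompactHelpers`, `GetEnumValues`, `StartThread`, `SimpleSet`) are made up for illustration. The enum helper is one possible Reflection-based approach and not necessarily the one from the forum thread, and the thread helper uses a closure instead of the shared field described above.

```csharp
using System;
using System.Collections;
using System.Collections.Generic;
using System.Reflection;
using System.Threading;

public static class CompactHelpers
{
    // Enum.GetValues() stand-in: enum members are public static literal fields.
    public static List<T> GetEnumValues<T>()
    {
        if (!typeof(T).IsEnum)
            throw new ArgumentException("T must be an enum type.");
        var values = new List<T>();
        foreach (FieldInfo field in typeof(T).GetFields(BindingFlags.Public | BindingFlags.Static))
            if (field.IsLiteral)
                values.Add((T)field.GetValue(null));
        return values;
    }

    // Math.Log(double, double) stand-in, using the change-of-base identity.
    public static double Log(double value, double newBase)
    {
        return Math.Log(value) / Math.Log(newBase);
    }

    // ParameterizedThreadStart stand-in: the lambda captures the context,
    // so a plain ThreadStart delegate is enough.
    public static Thread StartThread<T>(Action<T> body, T context)
    {
        var thread = new Thread(() => body(context));
        thread.Start();
        return thread;
    }
}

// Minimal set backed by a Dictionary; only the keys matter.
public class SimpleSet<T> : IEnumerable<T>
{
    private Dictionary<T, bool> items = new Dictionary<T, bool>();

    public int Count { get { return items.Count; } }

    public bool Contains(T item) { return items.ContainsKey(item); }

    public bool Remove(T item) { return items.Remove(item); }

    // Returns false if the item was already present, like HashSet<T>.Add.
    public bool Add(T item)
    {
        if (items.ContainsKey(item)) return false;
        items.Add(item, true);
        return true;
    }

    public void UnionWith(IEnumerable<T> other)
    {
        foreach (T item in other) items[item] = true;
    }

    public void IntersectWith(IEnumerable<T> other)
    {
        // Build a fresh dictionary instead of removing while enumerating.
        var kept = new Dictionary<T, bool>();
        foreach (T item in other)
            if (items.ContainsKey(item)) kept[item] = true;
        items = kept;
    }

    public IEnumerator<T> GetEnumerator() { return items.Keys.GetEnumerator(); }

    IEnumerator IEnumerable.GetEnumerator() { return GetEnumerator(); }
}
```

Note that `GetEnumValues` allocates a new list on every call, so on the Xbox it is worth caching the result rather than calling it every frame.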

4. You’ll pretty much need a Content project

I don’t like the fact that in a standard XNA project, the content is compiled at build-time, in the Visual Studio IDE. For many reasons… one of them being that I’m not the one producing the content, the artist is, and he certainly doesn’t want to have Visual Studio installed. Another one being that my Content Processors are super heavy and VS sometimes crashes with an OutOfMemoryException before the build completes.

So what I did is write a content compiling tool that uses the MSBuild API to generate something like the .contentproj (yet simpler) based on the filesystem automatically, and compile it externally without needing Visual Studio. This works really great for Windows, we’ve been using it for months now.

But for Xbox… the deployment process is also tied to Visual Studio. And I’m not expert enough at MSBuild technologies to be able to replicate deployment outside of it. So I ended up making a content project only for the Xbox version of my Game project, and I compile it separately when I need to test through XNA Game Studio Connect. This works OK, but I’m still a little unhappy about this whole Visual Studio dependency. I hope they look into it properly in the future.

Update: I was under the impression that you needed to have a content project for Xbox deployment, but Leaf (first comment) pointed out two ways of handling content compilation outside of Visual Studio. I’m definitely going to use the first one!

And…

These are the big points for now. Stuff I thought about adding: RenderTargets behave differently on Xbox and PC (but Shawn Hargreaves already blogged extensively on the subject and it’s way better than it used to be), Edit-And-Continue is not supported when debugging on the Xbox (but that would’ve been asking for the moon!), you get different warnings for shader compilation when targeting the Xbox 360 platform so you should pay attention to that, etc.

I’m still very much halfway through the conversion process, and I’m still learning, and still discovering oddities. If you have advice or corrections, please let me know through comments! On my part I’ll keep this post updated if I hit another big wall.