I’ve decided to repost all my remaining TV3D 6.5 samples to this blog (until I get bored). These are not new, but they were only downloadable from the TV3D forums until now!

This demo (originally released July 1st 2008 here) is a mixed bag of many techniques I wanted to demonstrate at once. It contains the following, all of which are described below :

- Pixel-perfect sampling of mainbuffer rendersurfaces for post-processing

- A component/service system very similar to XNA’s and with support for IoC service injection

- A minimally invasive, memory-conserving workflow for post-processing shaders

- Many realtime surface downsampling methods (blit, blit 2x, tent, sinc+kaiser and bicubic)

- An optimized Gaussian blur shader with up to 17 effective taps in a single pass

Download

FullscreenShaders_(final).zip [8.6 Mb] – C#3 (VS.NET 2008, TV3D 6.5 Prerelease .NET DLL Required)

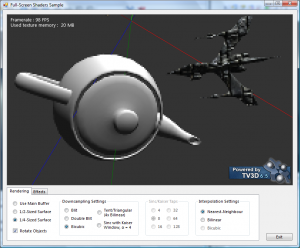

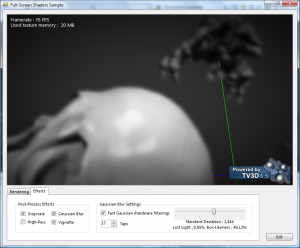

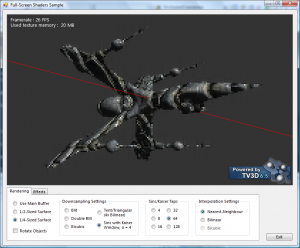

Screenshots

Dissection

Pixel-Perfect Sampling

There is a well-known oddity in DirectX 9 and earlier (Direct3D 10 changed the pixel-center convention and fixed it… amazing!) : when drawing a fullscreen textured quad, the texture coordinates need to be shifted by half a texel. Without that shift, each pixel samples right between texel centers, so if you use hardware filtering (which is typically enabled by default), all your fullscreen quads come out slightly blurred.

But the half-texel offset depends on the texel size of the texture you are sampling, as well as on the viewport to which you are rendering! And it gets seriously weird when you’re downsampling (sampling a texture at a lower frequency than its resolution), or when you’re sampling different-sized textures in a single render pass…

So this demo addresses only the simple cases. I very recently found a more proper way to address this problem in a 2003 post from Simon Brown. I tested it and it works, but I don’t feel like updating the demo. ^_^
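To make the blur concrete, here is a minimal 1D sketch in Python (not the demo's C# or shader code). It assumes the simple case where the texture and the render target have the same size, and that pixel `i` of a fullscreen quad ends up sampling `u = i / width`, which is the DirectX 9 style mapping described above. `sample_bilinear` and `render_fullscreen` are hypothetical helper names, not TV3D functions.

```python
import math

def sample_bilinear(texture, u):
    """Sample a 1D texture at normalized coordinate u with linear filtering.

    Texel i has its center at (i + 0.5) / len(texture); edges are clamped.
    """
    n = len(texture)
    x = u * n - 0.5                      # position measured from texel centers
    i0 = math.floor(x)
    frac = x - i0
    left = texture[min(max(i0, 0), n - 1)]
    right = texture[min(max(i0 + 1, 0), n - 1)]
    return left * (1.0 - frac) + right * frac

def render_fullscreen(texture, width, half_texel_fix):
    """Render `width` pixels, where pixel i samples u = i / width (assumed mapping)."""
    offset = 0.5 / width if half_texel_fix else 0.0
    return [sample_bilinear(texture, i / width + offset) for i in range(width)]

source = [0.0, 1.0, 0.0, 1.0]
blurred = render_fullscreen(source, len(source), half_texel_fix=False)
exact = render_fullscreen(source, len(source), half_texel_fix=True)
print(blurred)  # neighbouring texels get averaged together
print(exact)    # matches the source texels
```

Without the offset every pixel falls exactly between two texel centers, so the filter averages them; with the half-texel shift each pixel lands on a texel center and the copy is exact.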

Component System

The component system was re-used in Trouble In Euclidea and Super HYPERCUBE, and I’m currently using it to prototype a culling system that uses hardware occlusion queries efficiently. The version bundled with this demo is slightly out of date, but very functional.

I blogged about the service injection idea a long time ago, if you want to read up on it.

Post-processing that just sits on top

This is how I think post-processing should be done : using the main buffer directly, without rendering the scene to a rendersurface to begin with. This way, the natural rendering flow is not disturbed, and post-processing effects are just plugged in after the rendering is done.

If you can work directly with the main buffer (no downscaling beforehand), you can grab the main buffer onto a temporary rendersurface using BltFromMainBuffer() after all draw calls are performed, and call Draw_FullscreenQuadWithShader() using the temporary rendersurface as a texture. The post-processed result is output right on the main buffer.

Any number of effects can be chained this way… blit, draw, blit, draw. Since the RS usage only lasts until the fullscreen draw call, you can re-use the same RS over and over again.
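The blit, draw, blit, draw chain can be sketched with plain Python lists standing in for the main buffer and the temporary rendersurface. Everything here is a hypothetical stand-in for illustration: the two helper functions only mimic the roles of BltFromMainBuffer() and Draw_FullscreenQuadWithShader(), they are not the TV3D calls or their signatures, and the "effects" are simple per-pixel functions rather than shaders.

```python
def blit_from_main_buffer(main_buffer, temp_surface):
    """Stand-in for the blit step: copy the main buffer into the temporary surface."""
    temp_surface[:] = main_buffer

def draw_fullscreen_quad(main_buffer, temp_surface, effect):
    """Stand-in for the draw step: run `effect` on every pixel of the temporary
    surface and write the result straight back to the main buffer."""
    main_buffer[:] = [effect(pixel) for pixel in temp_surface]

def apply_post_effects(main_buffer, effects):
    """Chain effects as blit, draw, blit, draw, reusing one temporary surface."""
    temp_surface = [0.0] * len(main_buffer)   # allocated once, reused by every effect
    for effect in effects:
        blit_from_main_buffer(main_buffer, temp_surface)
        draw_fullscreen_quad(main_buffer, temp_surface, effect)

def invert(value):
    return 1.0 - value

def darken(value):
    return value * 0.5

frame = [0.0, 0.25, 1.0]          # pretend the scene was already rendered here
apply_post_effects(frame, [invert, darken])
print(frame)
```

The point of the design is that the scene rendering code never needs to know about the effects: they only read a copy of the finished frame and overwrite it.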

If you want to scale the main buffer down before applying your effect (e.g. for performance reasons, or to widen the effect of a blur), then you’ll need to work “one frame late”. I described how this works in this TV3D forum post.

Downsampling Techniques

The techniques I implemented for downsampling are the following :

- Blit : Simply uses BltFromRenderSurface onto a half- or quarter-sized rendersurface. A single-shot 4x downscale causes sampling issues because it ignores half of the source texels… but it’s fast!

- Double blit : 4x downscaling via two successive blits, each receiving surface being half as big as the source. It has fewer artifacts and is still reasonably fast.

- Bicubic : A more detail-conserving two-pass filter for downsampling that uses 4 linear taps for 2x downsampling, or 8 linear taps for 4x. Works great in 2x, but I’m not sure if the result is accurate in 4x. (reference document, parts of it like the bits about interpolation/upsampling are BS but it worked to an extent)

- Tent/Triangular/Bilinear 4x : I wasn’t sure of its exact name because it’s shaped in 2D like a tent, in 1D like a triangle, and it’s exactly the same as bilinear filtering… It’s accurate but detail-murdering 4x downsampling. Theoretically, it should produce the same result as a “double blit”, but the tent is a lot more stable, which shows that BltFromRenderSurface has sampling problems.

- Sinc with Kaiser window : A silly and time-consuming experiment that pretty much failed. I found out about this filter in a technical column by Jonathan Blow (of Braid fame), which mentioned that it is the perfect low-pass filter and should be the most detail-conserving downsampling filter. There are very convincing experimental results in part 2 as well, so I gave it a shot. I get a lot of rippling artifacts and it’s way too slow for realtime, but it’s been fun to try out. (reference document)
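To illustrate why a single-shot 4x blit misses texels while a double blit does not, here is a small 1D Python sketch. It assumes each blit takes one linearly filtered tap per destination pixel, placed at the center of that pixel's source footprint; that is my assumption about how the blit behaves, not something confirmed from TV3D. `bilinear_tap` and `downscale` are hypothetical helper names.

```python
import math

def bilinear_tap(texels, position):
    """Linearly filtered read at `position`, measured in texels
    (texel i is centered at i + 0.5); edges are clamped."""
    x = position - 0.5
    i0 = math.floor(x)
    frac = x - i0
    last = len(texels) - 1
    left = texels[min(max(i0, 0), last)]
    right = texels[min(max(i0 + 1, 0), last)]
    return left * (1.0 - frac) + right * frac

def downscale(texels, factor):
    """One filtered tap per destination pixel, at the center of its source footprint."""
    count = len(texels) // factor
    return [bilinear_tap(texels, (i + 0.5) * factor) for i in range(count)]

source = [1.0, 0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0]
single_4x = downscale(source, 4)                # one blit to a quarter-sized surface
double_2x = downscale(downscale(source, 2), 2)  # two successive half-sized blits
print(single_4x)  # the bright texels are never read
print(double_2x)  # every source texel contributes
```

In the single 4x pass each tap only blends the two middle texels of its four-texel footprint, so the bright texels vanish entirely; the two 2x passes read everything and preserve the average brightness.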

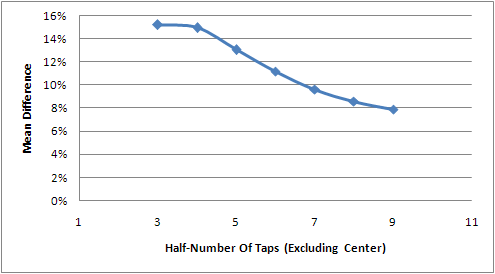

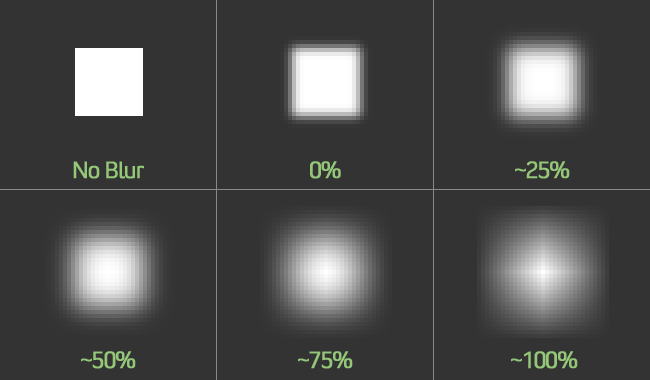

Fast Gaussian Blur + Metrics

The Gaussian blur shader (and its accompanying classes) in this demo is an implementation of the stuff I blogged about some months ago : the link between “lost light” in the weights calculation and how similar to a box-filter it becomes. You can change the kernel size dynamically and it’ll tell you how box-similar it is. The calculation for this box-similarity factor is still very arbitrary and you should take it with a grain of salt… but it’s a metric, an indicator.

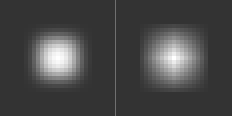

But there’s something else : hardware filtering! I read about this technique in a GLSL Bloom tutorial by Philip Rideout, and it allows up to 17 effective horizontal and vertical samples in a ps_2_0 (SM2 compatible) pixel shader… resulting in 289 effective samples and a very wide blur! It speeds up 7-tap and 9-tap filters nicely too, by reducing the number of actual samples and instructions.

Philip’s tutorial contains all the details, but the idea is to sample in-between taps using interpolated weights and achieve the same visual effect even if the sample count is halved. Very ingenious!
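The in-between sampling trick can be checked numerically. The Python sketch below (1D, not the demo's shader) merges each pair of neighbouring taps into a single linearly filtered fetch: the merged weight is the sum of the two weights, and the fetch offset is their weighted average. The sigma value, the test signal and all function names are arbitrary choices for illustration.

```python
import math

def gaussian_weights(radius, sigma):
    """Normalized weights for offsets 0..radius (one side of a symmetric kernel)."""
    raw = [math.exp(-(i * i) / (2.0 * sigma * sigma)) for i in range(radius + 1)]
    total = raw[0] + 2.0 * sum(raw[1:])
    return [w / total for w in raw]

def merge_taps(weights):
    """Pair up neighbouring side taps into (offset, weight) fetches.
    Expects an even number of side taps; the center tap is kept as it is."""
    taps = [(0.0, weights[0])]
    for i in range(1, len(weights) - 1, 2):
        w = weights[i] + weights[i + 1]
        offset = (i * weights[i] + (i + 1) * weights[i + 1]) / w
        taps.append((offset, w))
    return taps

def linear_fetch(signal, position):
    """Linearly filtered read at a fractional index, clamped at the edges."""
    i0 = math.floor(position)
    frac = position - i0
    last = len(signal) - 1
    a = signal[min(max(i0, 0), last)]
    b = signal[min(max(i0 + 1, 0), last)]
    return a * (1.0 - frac) + b * frac

def blur_discrete(signal, center, weights):
    """Reference blur: one read per tap."""
    total = weights[0] * signal[center]
    for i in range(1, len(weights)):
        total += weights[i] * (signal[center + i] + signal[center - i])
    return total

def blur_merged(signal, center, taps):
    """Same blur using the merged taps and filtered fetches."""
    total = taps[0][1] * signal[center]
    for offset, w in taps[1:]:
        total += w * (linear_fetch(signal, center + offset)
                      + linear_fetch(signal, center - offset))
    return total

weights = gaussian_weights(radius=8, sigma=3.0)   # 17 discrete taps
taps = merge_taps(weights)                        # center + 4 mirrored pairs = 9 fetches
signal = [float((i * 7) % 11) for i in range(32)]
print(blur_discrete(signal, 16, weights), blur_merged(signal, 16, taps))
```

Both blurs give the same value up to floating-point rounding, while the merged version needs 9 fetches per axis instead of 17.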

If you have more questions, I’ll be glad to answer them in comments.

Otherwise I’ll direct you to the original TV3D forum post for info on its development… there’s a link to a simpler earlier version of the demo there, too.