An update to this post is available here.

Recently I was coding some physics-enabled particle systems and ended up with some fairly CPU-intensive stuff that looped as many times as there are active particles in a system, and as many times as there are active particle systems, each update cycle.

And it got choppy.

I don’t like choppy.

So I had three choices :

- Offload to the GPU using GPGPU or Vertex Textures, but doing that in XNA with Shader Model 2 support is… time-consuming. Especially when you have no background work on the subject.

- Profile and optimize my physics code, or switch to a physics package like Havok or Farseer, but I expect that would also take too much time.

- Multithread using loop parallelism to use 100% of my CPU power instead of 50%! (I have a Core 2 Duo processor, and there are more and more mainstream PCs with dual-, quad- and even octo-cores)

I ended up doing the last one because it was the simplest, even though multithreading is rarely ever simple. But in some specific cases, it’s not much of a headache, really.

If your looped operation does not change the context of further iterations, that is, if every iteration can be done in any order and individually without any write locking, it takes like 30 minutes to get it to work in C#, and with no concurrency problems. It’s like offloading static Web content to a different server, just a matter of routing to the right hardware.
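A minimal sketch of that kind of loop splitting, assuming every iteration is fully independent. The `LoopSplitter` and `ParallelFor` names are made up for illustration and are not taken from the test project:

```csharp
using System;
using System.Threading;

public static class LoopSplitter
{
    // Runs body(i) for every i in [0, count), split into one contiguous
    // chunk per worker thread. Assumes workerCount > 0 and that iterations
    // never write to data another iteration reads.
    public static void ParallelFor(int count, int workerCount, Action<int> body)
    {
        var threads = new Thread[workerCount];
        int chunk = (count + workerCount - 1) / workerCount; // ceiling division

        for (int w = 0; w < workerCount; w++)
        {
            // Declared inside the loop so each thread captures its own bounds.
            int start = w * chunk;
            int end = Math.Min(start + chunk, count);

            threads[w] = new Thread(() =>
            {
                for (int i = start; i < end; i++)
                    body(i);
            });
            threads[w].Start();
        }

        // Block until every chunk is done so the update cycle can continue.
        foreach (Thread t in threads)
            t.Join();
    }
}
```

On a dual-core machine, something like `LoopSplitter.ParallelFor(particles.Length, 2, i => Update(particles[i]))` would hand half of the particles to each thread (`particles` and `Update` being whatever your own code uses).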

Annoyances and questions

- ParameterizedThreadStart is not generic. If you have to pass a context to your threaded operation, you have to cast it back to what it really is, at each iteration. It’s unnecessary and annoying… is it slow?

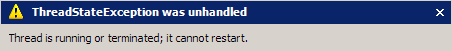
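In case the image does not load, the pattern in question looks roughly like this. It is a sketch under assumptions: `UpdateContext` and `CastingWorker` are hypothetical names, and the square root stands in for the real per-element work.

```csharp
using System;
using System.Threading;

// Hypothetical context type: the slice of data one thread should process.
public sealed class UpdateContext
{
    public double[] Values;
    public int Start;
    public int End;
}

public static class CastingWorker
{
    // Matches the ParameterizedThreadStart signature, so the context
    // arrives as a plain object and has to be cast back.
    public static void Work(object state)
    {
        var context = (UpdateContext)state; // the cast, paid once per thread start
        for (int i = context.Start; i < context.End; i++)
            context.Values[i] = Math.Sqrt(i);
    }

    // Starts one thread on the given context and waits for it to finish.
    public static void Run(UpdateContext context)
    {
        var thread = new Thread(new ParameterizedThreadStart(Work));
        thread.Start(context);
        thread.Join();
    }
}
```

In this sketch the cast happens once per started thread, not once per array element, so its cost is spread over the whole chunk of work.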

- Once a thread dies, you can’t resurrect it. Correct me if I’m wrong, but FSM knows I’ve tried, and a thread that finished its execution cannot be re-run, even if its thread-start method is the same. You have to instantiate another. Does that affect performance…?
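The "no resurrection" behavior is easy to check for yourself. A small sketch (the `RestartDemo` name is made up) that tries to start a finished thread a second time and reports whether the runtime refuses:

```csharp
using System;
using System.Threading;

public static class RestartDemo
{
    // Returns true if a second Start() on a finished thread is rejected.
    public static bool SecondStartThrows()
    {
        var thread = new Thread(() => { /* trivial work */ });
        thread.Start();
        thread.Join();          // the thread has now finished

        try
        {
            thread.Start();     // attempt to "resurrect" it
            return false;
        }
        catch (ThreadStateException)
        {
            return true;        // a Thread object can only be started once
        }
    }
}
```

`Thread.Start` is documented to throw `ThreadStateException` when the thread has already been started, so a fresh `Thread` instance per run really is the only option with this API.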

The problem with thread creation is that I create a lot of temporary threads. They live a single update cycle, and I instantiate one per particle system per update cycle, at about 60 cycles per second. It sounds like it could slow things down.

So I made a test!

Benchmark

The (fictional) situation is the following : in the middle of a game loop, you have to evaluate the 0th Order Modified Bessel Function Of The First Kind for some reason. And you need to do that for a large dataset, say 500 times per update cycle.

Actually, this kinda makes sense if you’re calculating weights for a very wide Kaiser filter, which I will probably cover later. I had code lying around, which is why I used the elegantly-named Bessel function.
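For the curious, that function has a standard integral form, I0(x) = (1/π) ∫ exp(x·cos θ) dθ for θ from 0 to π. The sketch below approximates it with a plain midpoint rule. This is an assumption about how one might evaluate it, not necessarily what the test project does, and the `steps` parameter is something I introduce here to control how much work each evaluation costs.

```csharp
using System;

public static class Bessel
{
    // Zeroth-order modified Bessel function of the first kind:
    //   I0(x) = (1/pi) * integral from 0 to pi of exp(x * cos(theta)) d(theta)
    // approximated with the midpoint rule over `steps` equal slices.
    public static double I0(double x, int steps)
    {
        double sliceWidth = Math.PI / steps;
        double sum = 0.0;

        for (int k = 0; k < steps; k++)
        {
            double theta = (k + 0.5) * sliceWidth;  // midpoint of slice k
            sum += Math.Exp(x * Math.Cos(theta));
        }

        // Integral estimate is sum * sliceWidth; dividing by pi gives I0.
        return sum * sliceWidth / Math.PI;
    }
}
```

Each call costs `steps` exponentials and cosines, which is exactly the kind of self-contained, order-independent work that is worth spreading across cores.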

So, this is slow, because it’s an integral. And so multi-threading would be beneficial. The different strategies are :

- Single-Threading; it’s always good to know how slow it went before any optimization… if only for programmer ego.

- Multi-Threading, using the ParameterizedThreadStart delegate, which means we’ll have to cast the Object to our real context type.

- Multi-Threading, using a class-local, strongly-typed context and the (parameterless) ThreadStart delegate. This way we avoid the cast, and sacrifice a weird variable in the host class’ header.

- Multi-Threading, using a generic Thread wrapper that does the strongly-typed context caching instead of leaving it lying around. It’s basically a proxy for Thread with the context variable.

- Multi-Threading, using a single “kept-alive” Thread that is started once for all update cycles, and that is forced into an artificial idle state between update cycles.
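To make the two strongly-typed variants in the list concrete, here is a rough sketch of each. All names (`ClassLocalHost`, `ContextThread<T>`) are hypothetical and the doubling loop is placeholder work; the real test project may be organized differently.

```csharp
using System;
using System.Threading;

// Class-local context: the data is parked in a field of the host class
// and read by a parameterless ThreadStart method. No cast needed.
public sealed class ClassLocalHost
{
    private double[] pendingValues;   // the "weird variable" in the host class

    public void Update(double[] values)
    {
        pendingValues = values;
        var thread = new Thread(new ThreadStart(Work));
        thread.Start();
        thread.Join();
    }

    private void Work()
    {
        for (int i = 0; i < pendingValues.Length; i++)
            pendingValues[i] *= 2.0;
    }
}

// Generic wrapper: a thin proxy around Thread that keeps the typed
// context itself, so the host class stays clean.
public sealed class ContextThread<T>
{
    private readonly Thread thread;
    private readonly Action<T> body;
    private readonly T context;

    public ContextThread(Action<T> body, T context)
    {
        this.body = body;
        this.context = context;
        this.thread = new Thread(new ThreadStart(Run));
    }

    private void Run() { body(context); }

    public void Start() { thread.Start(); }
    public void Join() { thread.Join(); }
}
```

Both variants still create a new `Thread` every time; they only differ in where the strongly-typed context lives.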

I hoped, and even half-expected, that these solutions would go from slowest to fastest. The last one was particularly interesting because it skips all the thread instantiations and instead relies on a Monitor and a sync-lock object to “wait” between two update cycles. It also produces much less garbage.
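The kept-alive idea can be sketched as follows. This is one possible way to do it with `Monitor.Wait` and `Monitor.PulseAll`, under my own assumptions: the `KeptAliveWorker` name and the `BeginCycle`/`EndCycle` split are made up for the example, and there is no error handling if the work throws.

```csharp
using System;
using System.Threading;

public sealed class KeptAliveWorker
{
    private readonly object sync = new object();
    private readonly Action work;
    private bool workPending;   // set by the game loop, cleared by the worker
    private bool shutdown;

    public KeptAliveWorker(Action work)
    {
        this.work = work;
        var thread = new Thread(new ThreadStart(Loop));
        thread.IsBackground = true;   // do not keep the process alive
        thread.Start();               // started once, for all update cycles
    }

    // Wake the worker; the caller can then do its own share of the work.
    public void BeginCycle()
    {
        lock (sync)
        {
            workPending = true;
            Monitor.PulseAll(sync);
        }
    }

    // Block until the worker has finished this cycle's work.
    public void EndCycle()
    {
        lock (sync)
        {
            while (workPending)
                Monitor.Wait(sync);
        }
    }

    public void Stop()
    {
        lock (sync)
        {
            shutdown = true;
            Monitor.PulseAll(sync);
        }
    }

    private void Loop()
    {
        while (true)
        {
            lock (sync)
            {
                // Artificial idle state between update cycles.
                while (!workPending && !shutdown)
                    Monitor.Wait(sync);
                if (shutdown) return;
            }

            work();   // run outside the lock

            lock (sync)
            {
                workPending = false;
                Monitor.PulseAll(sync);   // tell EndCycle we are done
            }
        }
    }
}
```

Every cycle costs a couple of lock acquisitions and wake-ups on both sides, which is a plausible place for the overhead of this strategy to come from, though I have not measured that separately.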

Results

Here’s the result for 500 update cycles, in which I update a 500-sized data-set, in Release mode, out of the IDE so without any debugging, and as little stuff as possible running in the background. I used a Stopwatch object to calculate these timings.

Test ‘Single-Threaded’ Started… Completed.

Time Elapsed : 27.8970399 s

Test ‘Multi-Threaded, ParameterizedThreadStart’ Started… Completed.

Time Elapsed : 18.1804179 s

Test ‘Multi-Threaded, Class-Local Context’ Started… Completed.

Time Elapsed : 19.5161083 s

Test ‘Multi-Threaded, Generic Context’ Started… Completed.

Time Elapsed : 20.2257278 s

Test ‘Multi-Threaded, Kept-Alive Thread’ Started… Completed.

Time Elapsed : 23.7845815 s
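For reference, timings like these can be collected with a very small Stopwatch harness. The sketch below is an assumption about the general shape, with a made-up `Bench.TimeSeconds` helper, and not a copy of the test project's code:

```csharp
using System;
using System.Diagnostics;

public static class Bench
{
    // Runs updateCycle `cycles` times and returns the total elapsed seconds.
    public static double TimeSeconds(int cycles, Action updateCycle)
    {
        Stopwatch watch = Stopwatch.StartNew();
        for (int c = 0; c < cycles; c++)
            updateCycle();
        watch.Stop();
        return watch.Elapsed.TotalSeconds;
    }
}
```

Each strategy would then be passed in as the `updateCycle` delegate, with `cycles` set to 500 to match the numbers above.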

Of course, everything goes differently from what I expected. :P

The good news is, thread creation in .NET is very fast. No need to worry about that anymore. In fact, trying to circumvent that using a single kept-alive thread and monitor use makes it go roughly 30% slower than the fastest multi-threaded variant!

Also, casting the object context every iteration is faster than any of the strongly-typed alternatives. Unless the word “Object” in your code makes you want to shoot someone, it’s the best way to go. Kind of sad, that.

Here’s the C# 3.0 (VS.NET 2008) test project : Loop Threading Performance (24 KB)

Sorry again for the absolute absence of comments, it’s late and I don’t feel like it. D:

A Note On Debugging

One thing that’s slightly alarming and certainly worth mentioning is that the performance in the IDE (with debugging attached, but not necessarily in “Debug” mode) is hugely different.

When debugging, the “kept-alive” thread is almost always faster than all other strategies, by as much as 15%. This never replicates in the real world. So kids, always test out of the IDE, or add the empty green arrow (Start Without Debugging, Ctrl+F5) to your Visual Studio toolbar!