In March 2018, I attended Train Jam and chatted there with Rami Ismail about a project he was starting up : contemplative, 5-minute games released day-by-day. I thought it was neat, but I didn’t commit to anything since my time for side-projects is super limited.

In late November 2018, I was asked by Jupiter Hadley whether I’d like to contribute to a super secret project she and others were working on… I asked about details, and realized it’s the same thing Rami pitched me months earlier : Meditations! Jupiter explained that I should spend no more than 6 hours on the project, and submit it before December 14th 2018. The timing worked out, so I decided to give it a go.

I picked April 9th as the date (it was moved back 1 day to April 8th after the fact), and started browsing my Google Photos to see what the heck happened on an April 9th in my life… 2013 jumped out as a memorable one. My then-girlfriend (now wife) MC and I went to Nara, Japan where we met bowing deer and strolled the city on a beautiful sunny day. The cherry trees were at the peak of their flowering time, and they shed pink petals everywhere.

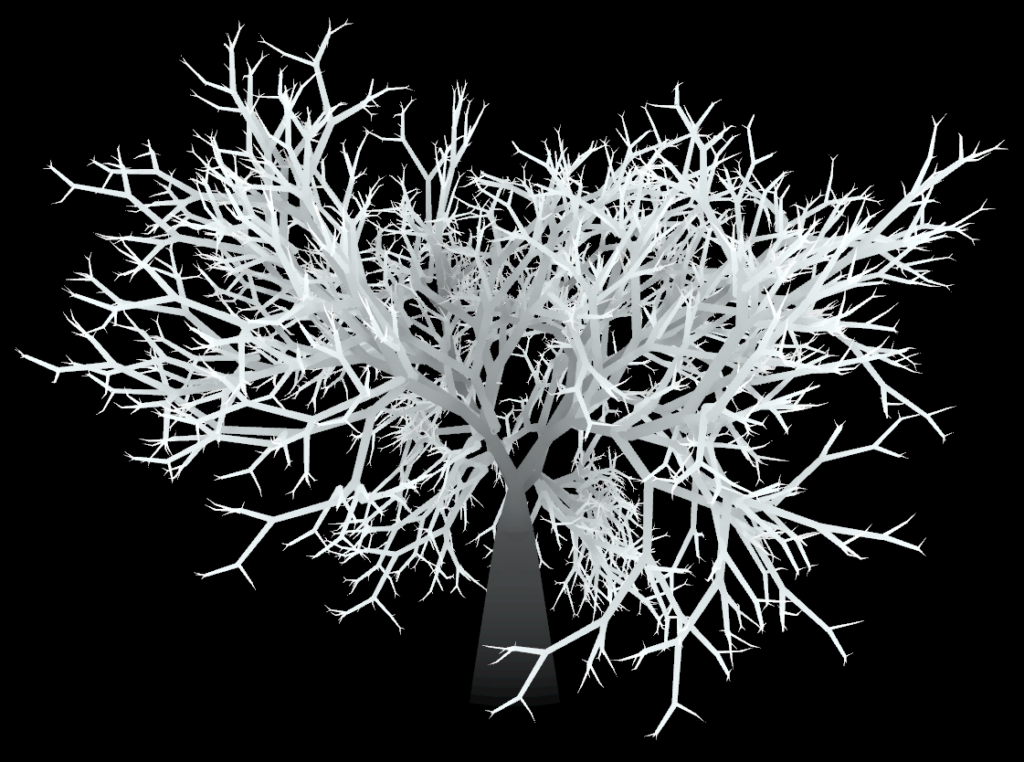

So I figured I’d do a little vignette game with a tree, falling petals, and heavy emphasis on the soundscape. I wanted it to feel vaguely like Proteus, with a Boards of Canada kind of musical vibe. Other stylistic references I had in mind : Devine Lu Linvega’s Kanikule, and @ktch0’s Places.

I asked my friend and colleague Rodrigo Rubilar whether he’d like to do sound design and music. I originally planned to do it myself with my limited audio gear, but I was excited to work with him on a small project like this, and he’d produce quality results 10 times faster than I could! We jammed an afternoon on an ambient track with a few layers, and one-shot sounds for the leaves hitting the ground.

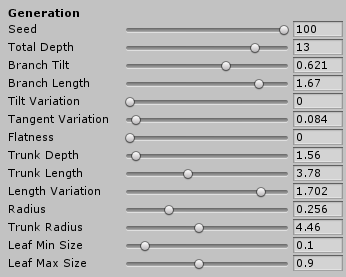

At the same time I started hacking at a fractal tree generator in Unity. It’s actually the first time I’d tried to write one, so it was interesting to see how the simplest algorithm could apply to 3D, with some randomness injected into it. I exposed a ton of parameters, and played with them until I was happy with the result.
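For the curious, here’s roughly what “the simplest algorithm, in 3D, with some randomness” can look like. This is a minimal Python sketch rather than the actual Unity code; the function names and the spread, shrink and children parameters are made up for illustration.

```python
import math
import random


def grow(start, direction, length, depth, rng, segments,
         spread=0.6, shrink=0.7, children=2):
    """Recursively append (start, end) branch segments to `segments`."""
    end = tuple(s + d * length for s, d in zip(start, direction))
    segments.append((start, end))
    if depth == 0:
        return
    for _ in range(children):
        # Nudge the parent's direction by a random offset, then renormalize.
        jittered = [d + rng.uniform(-spread, spread) for d in direction]
        norm = math.sqrt(sum(c * c for c in jittered)) or 1.0
        child_dir = tuple(c / norm for c in jittered)
        grow(end, child_dir, length * shrink, depth - 1, rng, segments,
             spread, shrink, children)


def make_tree(depth=5, seed=0):
    """Build a tree growing up from the origin; returns a list of segments."""
    segments = []
    rng = random.Random(seed)  # seeded so the same tree comes out every time
    grow((0.0, 0.0, 0.0), (0.0, 1.0, 0.0), 1.0, depth, rng, segments)
    return segments
```

Every exposed parameter changes the silhouette quite a bit, which is where most of the tweaking time goes.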

Then I started thinking about the petals, and how I wanted them to move in the air. I started with a basic Unity particle system and wind zones, but I suck at authoring those and I could never get the look I wanted. So I resorted to manually updating the petals with simple physics. But then I wanted a lot of petals… thousands of them. So I started toying around with Unity 2018’s job system.

Optimization ended up being the most interesting engineering aspect of the project for me, and the biggest time-sink. There is no way I respected the development time limit of 6 hours (probably spent more than 40 hours on it), but it was hard to let go. So I’d like to at least document what I ended up doing and why.

If you just want to look at the code, it’s all here.

Jobified update

I ended up with a single job that does most petal-related work :

- Update physics based on wind parameters

- Detect ground collisions

- Detect player interactions (you can raise petals off the ground by walking near them)
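As a rough illustration of those three steps, here is a single-petal update in Python. It’s a sketch under assumptions, not the job’s real code: the drag and gravity constants, the lift radius and the Petal fields are all hypothetical.

```python
from dataclasses import dataclass

GRAVITY = -0.5  # assumed: petals fall gently
DRAG = 2.0      # assumed: how quickly a petal's velocity follows the wind


@dataclass
class Petal:
    pos: list
    vel: list
    grounded: bool = False


def update_petal(p, wind, player_pos, dt, lift_radius=1.0, lift_speed=1.5):
    """Advance one petal by dt; return True if it hit the ground this step."""
    if p.grounded:
        # Player interaction: walking near a grounded petal kicks it back up.
        dx = p.pos[0] - player_pos[0]
        dz = p.pos[2] - player_pos[2]
        if dx * dx + dz * dz < lift_radius ** 2:
            p.grounded = False
            p.vel = [0.0, lift_speed, 0.0]
        return False

    # Physics: drag pulls the velocity toward the wind, gravity pulls down.
    for i in range(3):
        p.vel[i] += (wind[i] - p.vel[i]) * DRAG * dt
    p.vel[1] += GRAVITY * dt
    for i in range(3):
        p.pos[i] += p.vel[i] * dt

    # Ground collision: clamp to a flat ground plane at y = 0 and stop moving.
    if p.pos[1] <= 0.0:
        p.pos[1] = 0.0
        p.vel = [0.0, 0.0, 0.0]
        p.grounded = True
        return True
    return False
```

Since each petal only touches its own data, this kind of update parallelizes trivially, which is what makes it a good fit for a job.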

I want to update all the active petals every frame, so I schedule the job in the earliest Update callback (you can tweak that using Script Execution Order), and complete it in LateUpdate.

I wanted petals hitting the ground to trigger sounds, so the naive way of making this happen in Unity (and my initial approach) is to have one GameObject per petal, with an AudioSource component. The AudioSources don’t have to be enabled all the time, only when they’re playing a sound, but that does mean that I have to update their Transform so that the sound plays at the right 3D position.

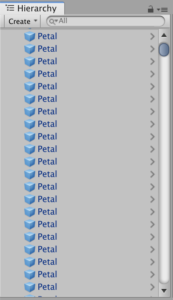

It’s possible to access and update Transforms from within a job by implementing IJobParallelForTransform and passing the transforms to the job using a TransformAccessArray. A major gotcha with the current version of the job system is that only root Transforms will be assigned to different threads. I originally had petals as children of an empty game object just for grouping, but then my job was using a single worker thread. Reading this forum post explained it, but it’s still baffling to me, and makes for a pretty hideous Hierarchy tab.

I ended up not using Transforms for petals at all, but more on this later.

One thing that I found interesting is how one returns data from the job to the game code. For instance, I want to know which petals should trigger a sound by returning a list of petal indices that touched the ground. You can declare a field in the job as NativeQueue<int>.Concurrent (which is part of the Collections package), and enqueue data as the job runs on multiple threads. At the call site, you need to create a NativeQueue<int>, but when you pass it to the job instance, you need to call its .ToConcurrent() method to make it usable. Then, once the job is complete, you can use .TryDequeue() to extract elements from it. Works great!
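The same pattern (workers enqueue, the caller drains after completion) can be sketched outside Unity. Below is a Python analogy using the standard library’s thread-safe queue and a thread pool; it is not the Unity API, and the function names and chunk size are made up for illustration.

```python
import queue
from concurrent.futures import ThreadPoolExecutor


def process_chunk(heights, start, end, hits):
    """Worker: enqueue the index of every petal at or below ground level."""
    for i in range(start, end):
        if heights[i] <= 0.0:
            hits.put(i)  # safe to call from several threads at once


def find_ground_hits(heights, workers=4, chunk=64):
    hits = queue.Queue()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        for start in range(0, len(heights), chunk):
            end = min(start + chunk, len(heights))
            pool.submit(process_chunk, heights, start, end, hits)
    # Leaving the `with` block waits for every worker, like completing a job.
    result = []
    while True:
        try:
            result.append(hits.get_nowait())  # the "try dequeue" step
        except queue.Empty:
            return result
```

Note that the order of the returned indices depends on thread timing, so the caller shouldn’t rely on it.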

I enabled Burst on my job very naively, and spent zero time optimizing the generated code. I did take care of the basics : make sure to mark job fields appropriately as [ReadOnly] and [WriteOnly], use the SIMD-capable Unity.Mathematics package whenever possible. In the end, the job runs way faster than I need, so I just moved on.

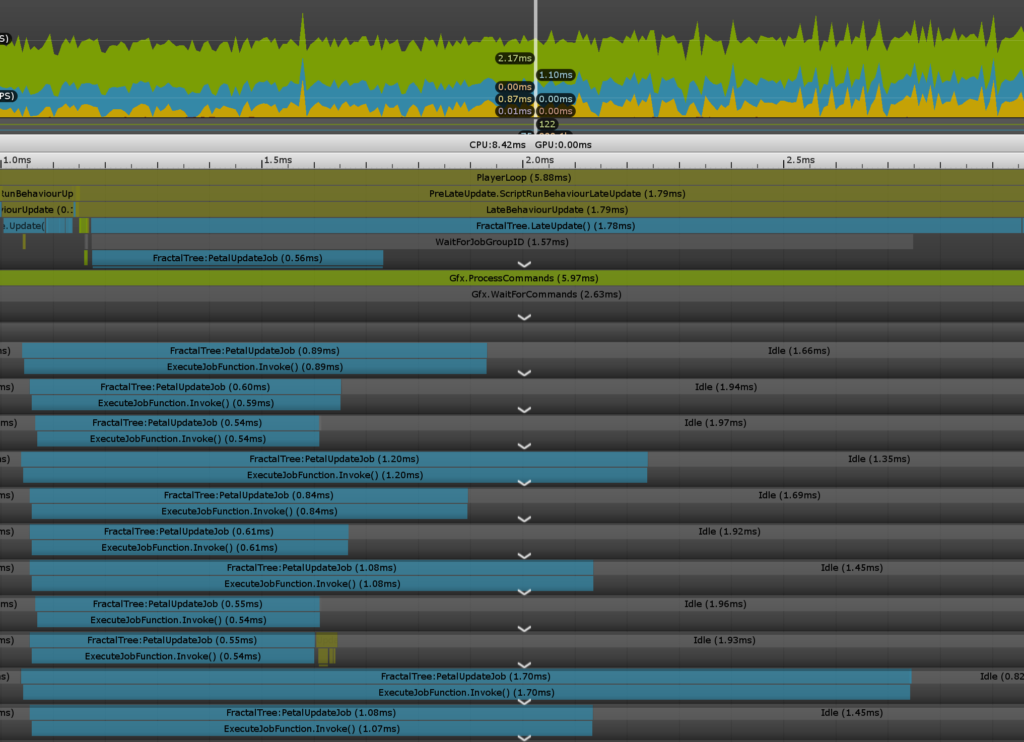

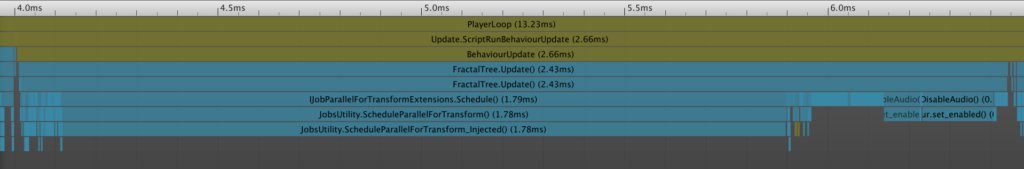

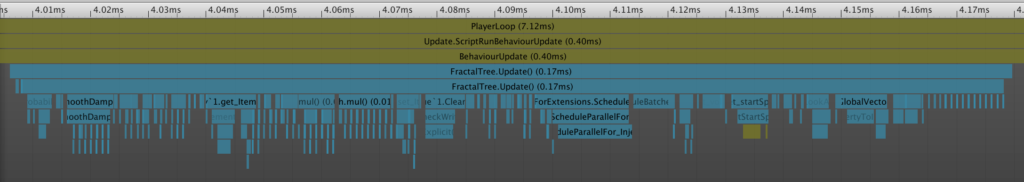

Burst had a dramatic effect on the job’s performance. Here’s a profile of the same code running on the same system, with Burst compilation toggled off and on. The biggest noticeable difference is that LateUpdate does not need to wait on jobs at all when they are Burst-enabled, which means it runs fully in parallel!

Rendering

Note : even though I’m using Unity 2018.3, I did not use the Scriptable Render Pipeline in this project, so the following probably only applies for the legacy rendering pipeline.

I initially had every petal GameObject with a MeshRenderer, a simple quad mesh, and the Unity Standard Shader with instancing turned on. It definitely worked, but Unity spent a lot of time in culling and batching, and I thought I could do better… by short-circuiting it completely.

It might sound like rendering everything all the time is a bad call, but in this game, the worst-case scenario of all petals being visible at once is the very first thing you see. Might as well make it a fast general case!

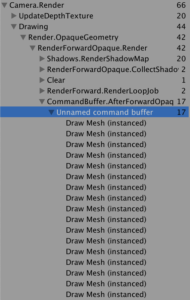

One way to draw objects manually is to use Command Buffers. By specifying a mesh, a material and a bunch of transformation matrices, you can use the DrawMeshInstanced() method to draw up to 1022 (that number I got empirically, but it appears to be undocumented) instances at once, in a single call. There is no renderer on the game objects themselves, so Unity acts as if they don’t exist, apart from issuing draw commands.

My petal update job’s final step is then to bake the petal’s current position, rotation and scale into a 4×4 transformation matrix, so that I can use them in my command buffers later. But there it gets annoying :
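To make the “baking” step concrete, here is what composing a translation, rotation and scale into a 4×4 matrix amounts to. This is a plain-Python sketch, not Unity code: I’m assuming the rotation is a unit quaternion given as (x, y, z, w), and the function names are just for illustration.

```python
def trs(position, rotation, scale):
    """Compose a 4x4 matrix (row-major nested lists) from T, R and S."""
    x, y, z, w = rotation  # assumed to be a unit quaternion
    sx, sy, sz = scale
    px, py, pz = position
    # Standard quaternion-to-rotation-matrix expansion.
    r = [
        [1 - 2 * (y * y + z * z), 2 * (x * y - z * w), 2 * (x * z + y * w)],
        [2 * (x * y + z * w), 1 - 2 * (x * x + z * z), 2 * (y * z - x * w)],
        [2 * (x * z - y * w), 2 * (y * z + x * w), 1 - 2 * (x * x + y * y)],
    ]
    # Scale each rotation column, then put the translation in the last column.
    return [
        [r[0][0] * sx, r[0][1] * sy, r[0][2] * sz, px],
        [r[1][0] * sx, r[1][1] * sy, r[1][2] * sz, py],
        [r[2][0] * sx, r[2][1] * sy, r[2][2] * sz, pz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def transform_point(m, p):
    """Apply a matrix built by trs() to a 3D point."""
    v = (p[0], p[1], p[2], 1.0)
    return tuple(sum(m[row][col] * v[col] for col in range(4))
                 for row in range(3))
```

The point is applied as scale first, then rotation, then translation, which is the usual convention for object-to-world matrices.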

- DrawMeshInstanced() takes a Matrix4x4[], nothing else, so we’ll have to copy our NativeArray to a managed array.

- We have to respect the batch size of 1022, so we have to use NativeSlice to take 1022-element slices of our NativeArray.

- NativeSlice.CopyTo() copies element by element, and it’s very slow.

I found a faster CopyTo implementation on the Unity forums which I slightly modified, and it does perform much better, but it’s a lot of moving data around for no reason. I wish that NativeSlices were directly usable with command buffers.
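The slicing logic itself is simple. Here’s the general shape of it in Python, as a sketch only: a plain list stands in for the NativeArray, a reused scratch list stands in for the managed Matrix4x4[], and iter_batches is a name I made up.

```python
BATCH_SIZE = 1022  # the per-call instance limit mentioned above


def iter_batches(matrices, batch_size=BATCH_SIZE):
    """Yield (scratch, count) pairs, reusing one scratch buffer throughout.

    Only the first `count` entries of `scratch` are valid for each batch,
    and the buffer is overwritten by the next one, so consume it right away.
    """
    scratch = [None] * batch_size
    for start in range(0, len(matrices), batch_size):
        count = min(batch_size, len(matrices) - start)
        # One bulk slice copy per batch instead of an element-by-element loop.
        scratch[:count] = matrices[start:start + count]
        yield scratch, count
```

Each (scratch, count) pair corresponds to one instanced draw call; the last batch is usually only partially filled, which is why the count has to travel along with the buffer.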

I end up with two very similar command buffers : one for depth rendering inside shadow-maps and for the camera’s depth pass, and one for regular opaque rendering.

The depth rendering command buffer uses the ShadowCaster pass from the Standard shader, and hooks to the AfterShadowMapPass event on lights as well as the AfterDepthTexture event on the camera. I needed the camera depth pass for SSAO using Keijiro Takahashi’s MiniEngineAO Unity implementation.

Triggering audio

As I hinted at earlier, I ended up ditching GameObjects for petals entirely. The only reason I originally kept them around (and updated at all times) was for audio, but it’s a lot of data shuffling just for sporadic sound triggers, so I opted for a simple audio trigger object pool instead.

I pre-initialize 1500 (again, empirical) dummy GameObjects with a disabled AudioSource component that lie dormant in the pool until an audio event happens. At that point, I take one from the pool, enable audio, position it where the impact happens, and play the audio clip. There is no update cost to having them around otherwise, and they are disabled & returned to the pool when the sound playback is complete.
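Stripped of anything Unity-specific, the pool boils down to something like the Python sketch below. The class and method names are hypothetical, and what happens when the pool runs dry (here the sound is simply dropped) is my assumption, not something the project necessarily does.

```python
class AudioTrigger:
    """Stand-in for a dummy object carrying a disabled audio source."""

    def __init__(self):
        self.active = False
        self.position = None


class TriggerPool:
    def __init__(self, size):
        # Everything is allocated up front; nothing is created at play time.
        self._free = [AudioTrigger() for _ in range(size)]

    @property
    def available(self):
        return len(self._free)

    def play_at(self, position):
        """Take a trigger from the pool, enable it and place it at the impact."""
        if not self._free:
            return None  # assumed policy: drop the sound if the pool is empty
        trigger = self._free.pop()
        trigger.active = True
        trigger.position = position
        return trigger

    def release(self, trigger):
        """Disable a trigger and return it once playback is complete."""
        trigger.active = False
        self._free.append(trigger)
```

Dormant triggers sit in a list and are never iterated over, which is why having 1500 of them costs nothing per frame.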

Petals are then strictly represented by a data structure that lives in a NativeArray, not by a GameObject. And the update job becomes an IJobParallelFor, mutating elements of that array. Neat!

You may wonder how dramatic a performance difference this makes. So here’s a comparison, taken on the same MacBook, of the CPU time spent only scheduling jobs in the version using Transforms and in the version without. Something about how Unity hands transform access to a job definitely incurs sizeable overhead.

Transforms (1.79ms spent scheduling jobs)

No Transforms (0.03ms spent scheduling jobs)

Bending the tree

When I had the petals mostly working well, it still felt strange that the tree didn’t animate at all. I wanted branches to sway in the wind, and I figured I could make that happen easily in its vertex shader.

It turned out to be a lot trickier than I expected, for a few reasons :

- The tree is generated as a single baked mesh, there is (ironically) no tree structure to it. No great reason for this apart from lack of foresight.

- The petals aren’t really attached to branches, their position is just set to the endpoint of branches when generating the tree.

To make the smaller branches bend but not the trunk, I set a bendability parameter during generation (how far away from the trunk that vertex is) in the texture coordinates.

I then define a bend axis perpendicular to the wind direction, a bend angle dependent on wind force, and a shake factor which is just a bunch of low-frequency and high-frequency sine functions multiplied together, to make it feel a bit more noisy & organic.

These variables are mapped to shader globals, and I used keijiro’s angle-axis to float3x3 HLSL function to apply this in the vertex shader of my tree. So far so good!
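Here is the math of that bend written out in Python instead of HLSL, as a rough sketch. The sine frequencies, the way shake modulates the angle, the choice of world up as the second vector for the bend axis, and bending around the origin are all assumptions for illustration; the angle-axis matrix is the standard Rodrigues rotation formula.

```python
import math


def shake(t):
    """Low- and high-frequency sines multiplied together (frequencies assumed)."""
    return math.sin(t * 0.7) * math.sin(t * 1.3) * math.sin(t * 11.0)


def angle_axis(angle, axis):
    """3x3 rotation matrix for a rotation of `angle` radians around a unit axis."""
    x, y, z = axis
    c, s = math.cos(angle), math.sin(angle)
    t = 1.0 - c
    return [
        [t * x * x + c, t * x * y - s * z, t * x * z + s * y],
        [t * x * y + s * z, t * y * y + c, t * y * z - s * x],
        [t * x * z - s * y, t * y * z + s * x, t * z * z + c],
    ]


def bend_vertex(vertex, bendability, wind_dir, wind_force, time):
    """Rotate a vertex around an axis perpendicular to the wind."""
    # Bend axis: cross(up, wind), i.e. perpendicular to wind and to world up.
    wx, wy, wz = wind_dir
    axis = (wz, 0.0, -wx)
    norm = math.hypot(axis[0], axis[2])
    if norm == 0.0:
        return tuple(vertex)  # wind is vertical (or zero): no bend axis
    axis = (axis[0] / norm, 0.0, axis[2] / norm)
    # The trunk has bendability 0 and stays put; branch tips bend the most.
    angle = wind_force * bendability * (1.0 + 0.25 * shake(time))
    m = angle_axis(angle, axis)
    return tuple(sum(m[r][c] * vertex[c] for c in range(3)) for r in range(3))
```

With wind blowing along +X, a vertex straight above the origin leans toward +X, and a vertex with zero bendability doesn’t move at all.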

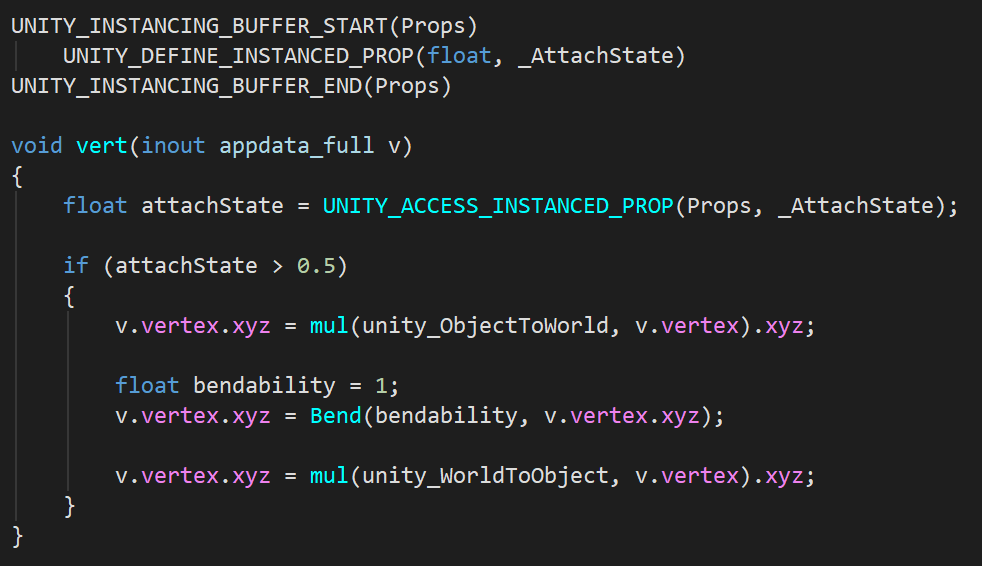

Where it got tricky is for petals. I need to differentiate when they’re attached or detached, so I can apply the wind bending selectively. I ended up using a MaterialPropertyBlock to have per-instance data (a single float) encoding this state.

In the vertex shader (which is part of a simple Surface Shader), it took me a minute to understand how to do world-space transformations on vertex data. The simplest way I found was to transform the vertex to world-space, and take it back in object-space when I’m done. Not super efficient… ¯\_(ツ)_/¯

On the application-side, I did use a NativeArray<float> for instance data, but I’m not sure it’s the easiest way to go about it. I don’t actually read from or write to it from within my update job, it’s all happening in the regular Update(). It allowed me, however, to re-use Slice() and CopyToFast() to make 1022-sized chunks for rendering.

One thing to note : unlike with the draw arrays, MaterialPropertyBlocks cannot be reused for multiple batches, so I create all the ones I need up-front and iterate through them.

Another thing I realized is that I need to apply the exact same function I used in the vertex shader to my petals the moment they are detached from the tree. This effectively bakes the tree’s bend at that moment in time into their transform, and keeps them from visually warping around as they detach.

In the end it still looks kinda stiff, but it’s better than the cement tree I started with.

Closing thoughts

I’m super happy with the final result. It’s relaxing, soft, yet sometimes surprisingly intense. It ended up feeling a lot more mournful than I expected, but I’m fine with that. I really enjoy the visual transition from a mess of pink sheets of paper to a menacing, pointy web.

But since everything was done in a rush, there are some things I wish I’d done differently.

In February 2019 I stumbled upon this rather excellent tree by Joe Russ, developer on Jenny LeClue :

It made me realize that my tree shape and animation could be so much better. Having the branch splits be less even, more jagged and well, tree-like, would add a lot. And by having the branches hierarchically laid out, I could have bent the sub-trees and produced a much more convincing wind swaying effect.

Spending so much time on performance optimization made me wonder if doing a whole forest of trees, instead of a single one, would be possible at all. Wouldn’t that be neat? But it brought additional headaches that I didn’t want to deal with.

But there’s something to be said about making a small thing, with a tiny scope, a reasonable amount of polish, and putting it out there. Just the fact that I was excited enough about making this that I convinced Rodrigo to tag along made it worthwhile.

So big thanks to Rami, Jupiter and everyone involved in the Meditations project for making this happen. And thanks as always to my wife MC for humoring me while I spent precious evening & weekend time endlessly debugging my stupid tree. ♡

Addendum : So what about ECS?

You might be wondering why I went straight to the Job System but didn’t use Entities, since they were showcased in the Megacity demo and Unity is really selling ECS as The Way to do massive amounts of objects with acceptable performance.

I don’t really have a good answer to this, except that I was short on time and was familiar with the idea of data parallelism, but not so much with ECS… and didn’t want to spend time learning how to use ECS properly. And I’m stubborn and will go down a path I’ve set for myself even if it’s a bad idea.

There’s a good chance that using ECS is the best way to do what I was trying to achieve, but I haven’t gone down that path yet. It would be interesting to revisit the project and do it that way; maybe I will!

I’m also curious to hear from people who have tried Unity’s ECS. Would it really make this easier to design? Would the rendering portion be simplified? Let me know!