Update May 15th 2016 : New v3.0 build on the GitHub project page with many fixes and additions.

Update August 26th 2010 : New v2.1 build on Google Code that fixes the “Invalid XML Characters” issue! Try that one if you had errors when fetching scrobbles.

Updated June 26th 2010 : This project is now hosted on Google Code! I’m new to this so bear with me, but I’ll try to make it nice so that people can contribute. (Google Code no longer exists and the project is now on GitHub)

Updated March 5th 2010 : You shouldn’t need to install both iTunes and WMP for it to work, just the one you want to use! Finally.

Original March 15th 2009 post follows.

Downloads

Get the freshest releases (source or binaries) on the GitHub project page.

Description

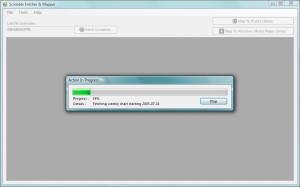

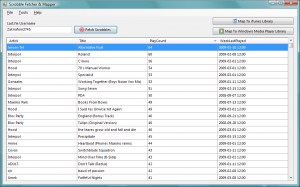

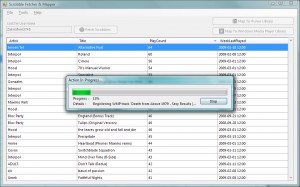

The Last.fm Scrobble Fetcher & Mapper does exactly that. It fetches all your scrobbles from your (or anyone’s, really) Last.fm account, assembles them in terms of Play Count and Date Last Played for each music track, and then exports this data to either Windows Media Player 11 or iTunes.
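As a rough illustration of the "assemble" step described above, here is a minimal sketch in Python. The application itself is written in C#, so this is not its actual code; the `Scrobble` record and the `aggregate` function are hypothetical names introduced only for this example. It collapses a flat list of scrobbles into a play count and a last-played date per track.

```python
from collections import namedtuple
from datetime import datetime, timezone

# Hypothetical record for a single scrobble: artist, track title, and play time (UTC).
Scrobble = namedtuple("Scrobble", ["artist", "title", "played_at"])


def aggregate(scrobbles):
    """Return {(artist, title): (play_count, date_last_played)} for a list of scrobbles."""
    stats = {}
    for s in scrobbles:
        key = (s.artist, s.title)
        # Start from zero plays; the first scrobble seeds the "last played" date.
        count, last = stats.get(key, (0, s.played_at))
        stats[key] = (count + 1, max(last, s.played_at))
    return stats


# Made-up sample data, for illustration only.
sample = [
    Scrobble("Artist A", "Song 1", datetime(2009, 1, 5, tzinfo=timezone.utc)),
    Scrobble("Artist A", "Song 1", datetime(2009, 3, 1, tzinfo=timezone.utc)),
    Scrobble("Artist B", "Song 2", datetime(2008, 12, 24, tzinfo=timezone.utc)),
]
stats = aggregate(sample)
```

The result is keyed by (artist, title) pairs, which is the shape a later mapping step would need to look up the matching library track in the player.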

I’ve been working on this application on and off for some time now and I think it’s ready for deployment. I built it because I have quite an extensive music library, I like my playlists smart and automatic, and I have poor luck with music players, hardware failure and vendor lock-in.

It seems that the “Play Count” and “Date Last Played” metadata fields are not part of a file’s ID3 tags, but are instead stored in the player’s local library. This is usually fine, and probably more efficient than writing to files all the time, but it means that if your player’s database gets corrupted (as happened to me with Windows Media Player), or if you decide (or are forced) to use another player such as iTunes because your iPhone doesn’t want to sync with anything else, then you’re screwed. The same goes, of course, if you reinstall your machine or get a new one.

I feel that this metadata is important because I like to have automatic “best-of” playlists that are based upon it, and sometimes it’s nice to listen to a comfortable, time-proven playlist.

Thankfully, if like me you’ve been using the Last.fm services since they were called Audioscrobbler, you’ve gathered an impressive amount of playback information in your account over the years. And using their web services, and my application, now you can take it back home!

Technology

I wanted this application to be my cleanest, most error-tolerant and most efficient piece of work yet in application design. I also tried to exploit all the C# 3.0 / .NET 3.5 features, having accumulated some months of experience with LINQ, lambda expressions, etc.

Here’s a rundown of its tech features :

- Multi-threaded

- Uses the Parallel Extensions for .NET June 2008 CTP and a little home-made framework for progress-reporting asynchronous foreground tasks.

- Most operations will use multiple cores thanks to the Task Parallel Library’s capabilities, and will seldom lock because of its lockless concurrent data structures.

- WCF-enabled

- Uses the Windows Communication Foundation classes in .NET 3.5 to communicate with Last.fm’s RESTful API.

- All the response data objects are deserialized automatically from XML using the built-in XmlSerializer.

- Error tolerant

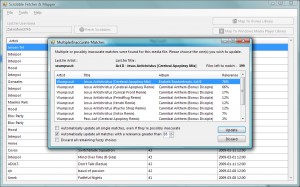

- Since Last.fm track data will not always match your library’s perfectly, I used the Levenshtein distance string metric and “neutralized” strings (removes all accentuated characters, symbols, whitespace, etc.) to get accurate matches.

- Will (should!) recover gracefully from errors, both caused by user input or unexpected conditions. It also allows graceful cancelation of long-running operations.
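The fuzzy matching idea from the "Error tolerant" list can be sketched as follows. This is a Python illustration, not the application's C# code. The function names (`neutralize`, `levenshtein`, `best_match`) and the `max_distance` threshold are assumptions made for this example, since the post does not say how the distance is thresholded or which characters exactly are stripped.

```python
import unicodedata


def neutralize(text):
    """Lowercase the text, strip accents, and drop symbols, punctuation and whitespace."""
    # NFKD splits an accented letter into its base letter plus a combining mark;
    # the combining mark is not alphanumeric, so the filter below removes it.
    decomposed = unicodedata.normalize("NFKD", text)
    return "".join(ch for ch in decomposed if ch.isalnum()).lower()


def levenshtein(a, b):
    """Classic dynamic-programming edit distance, keeping only two rows in memory."""
    if len(a) < len(b):
        a, b = b, a  # keep the inner row as short as possible
    previous = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        current = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            current.append(min(previous[j] + 1,          # deletion
                               current[j - 1] + 1,       # insertion
                               previous[j - 1] + cost))  # substitution
        previous = current
    return previous[-1]


def best_match(query, candidates, max_distance=2):
    """Return the closest candidate, or None if none is within max_distance (assumed threshold)."""
    target = neutralize(query)
    best, best_distance = None, max_distance + 1
    for candidate in candidates:
        distance = levenshtein(target, neutralize(candidate))
        if distance < best_distance:
            best, best_distance = candidate, distance
    return best
```

With this sketch, `best_match("Hoppipolla", ["Hoppípolla", "Glósóli"])` picks the accented library title even though the scrobbled name lacks the accent, while a title that is too far from every candidate yields `None` and could be reported in an error log instead of being mapped to the wrong track.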

Screenshots

Closing notes

– This code uses a Community Technology Preview version of the Parallel Extensions for .NET, and one that is almost a year old… So it’s delivered as-is, and you cannot (and should not) use that library in commercial products. Although I have functionally tested the program pretty extensively, I cannot guarantee it’s not going to corrupt your library or overwrite some information. Use at your own risk!

– I noticed that iTunes checks if the file is writable before setting any metadata concerning it. So you should make your files writable, otherwise you’ll get a ton of errors in the error log after the scrobble-mapping.

– You need to keep the iTunes instance running for the iTunes mapping to work. The COM interface requires it to be open, but you can minimize it to the tray.

– The code is released under the Creative Commons Attribution-Share Alike 2.5 license.

If you encounter anything unusual, if you have ideas for extensions to this program or have comments/questions about how it works, do ask! That said, I will probably blog some more about parts of this program, like the WCF usage or the asynchronous task classes. Enjoy!