I cleaned up this entry because it’s still getting traffic and didn’t really make sense as it was. I am not working on this anymore, haven’t been for months.

Description

My goal with this sample is to make a fast, simple, easy-to-use and good-looking (in other words, utopic :P) shadow mapping implementation in TV3D 6.5 with a directional light. It should work on landscape and meshes, perhaps on actors too but I don’t know how to rewrite an actor shader (animation blending = ouch).

I have already released a VB.Net implementation of Landscape Shadow Mapping on the TV3D forums. I might rewrite it in C# and my current programming standards and release it on my blog, but for now I’m not satisfied with the code enough to give it publicity…

Implementation Details

There are currently two modes :

- The PCF (Percentage-Closer Filtering) implementation uses a R32F texture for the depth map, which is the fastest format for what’s needed. I hope this is renderable on ATi and ps_2_0 hardware, I’ll have to check on this.

I read on a couple of sites that on nVidia hardware, using D24X8/D24S8 textures gave free 4×4 PCF with bilinear filtering… but damnit, I can’t create a rendersurface with this mode! Sylvain said he’d look into this. - The VSM (Variance Shadow Maps) implementation uses a A16B16G16R16F (aka HDR_FLOAT_16) texture for the depth map, because it allows for filtering on my GeForce 7000 series hardware :)

I considered using G16R16F, which is faster and has a smaller memory footprint, but looks like TV3D won’t allow me to use it as a render target. G32R32F and A32B32G32R32F work and are more precise, but they don’t allow filtering; so it looks like unfiltered PCF, unless it’s post-bilinear-filtered (which I do support).

For the depth map rendering, I use two cameras : one for the actual viewport, and an orthogonal (aka isometric) one for the light. Its look-at vector is the same as light direction, and its zoom and position are calculated using bounding boxes vector projection. Then when rendering the depth map in the shader, I use the WORLDVIEWPROJECTION semantic, which gives me exactly what I want. I found that to be the cleanest way.

Comparison Screenshots

Now here is the promised ton of screenshots.

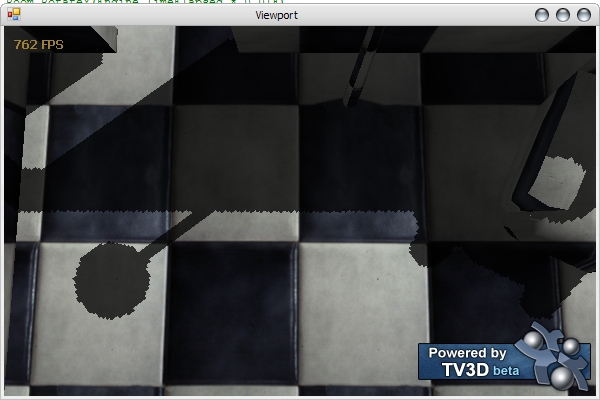

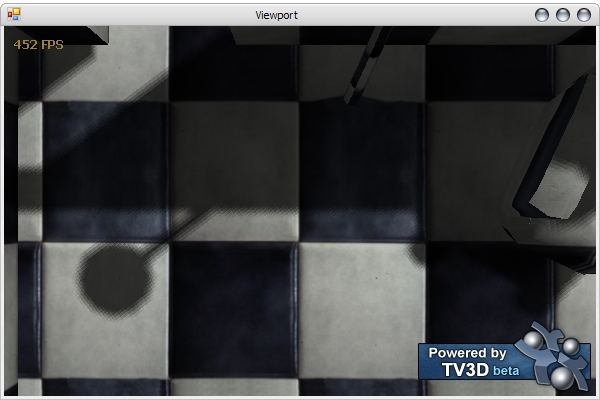

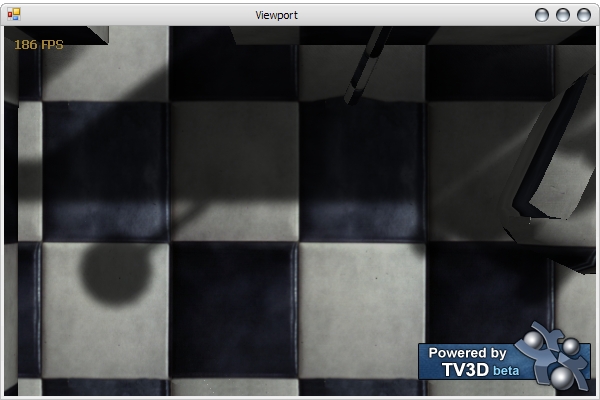

- No filtering

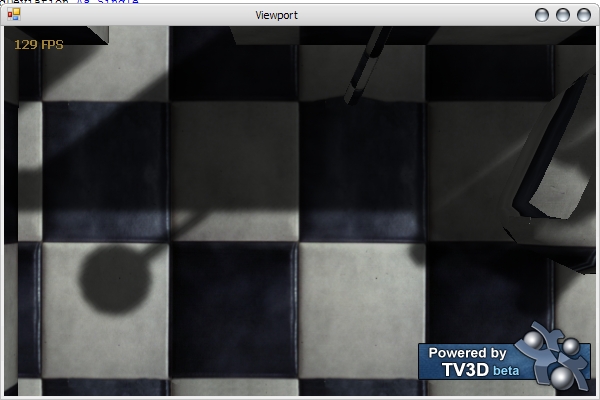

- No filtering (downsampled 4x)

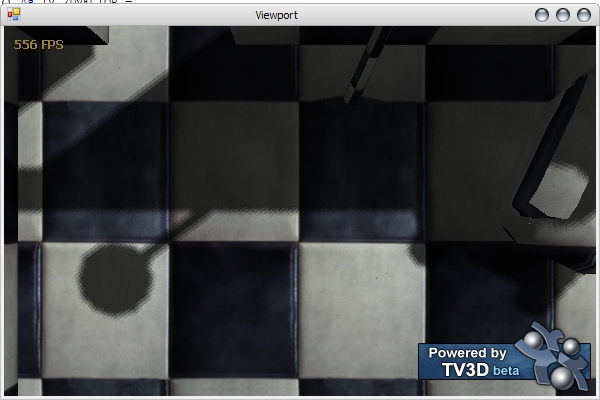

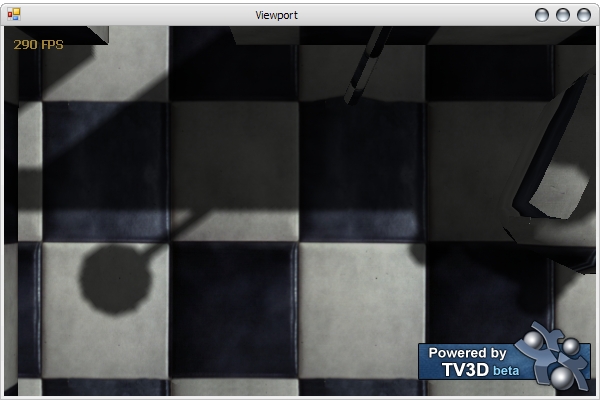

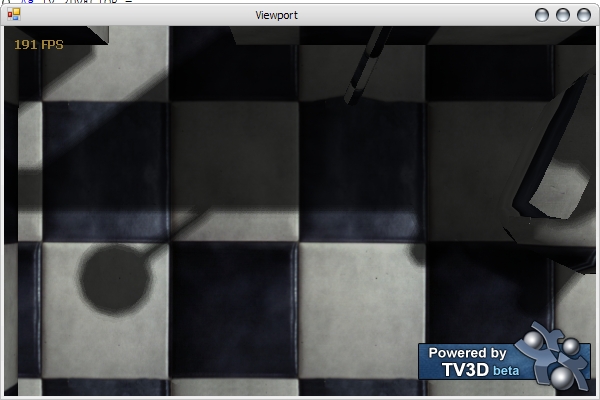

- 3×3 PCF (Percentage-Closer Filtering)

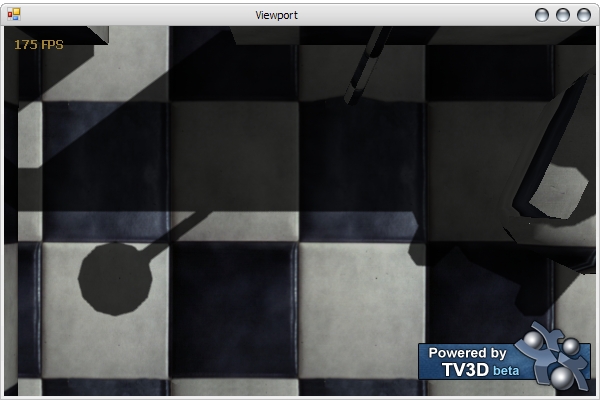

- 3×3 PCF with Jitter Sampling

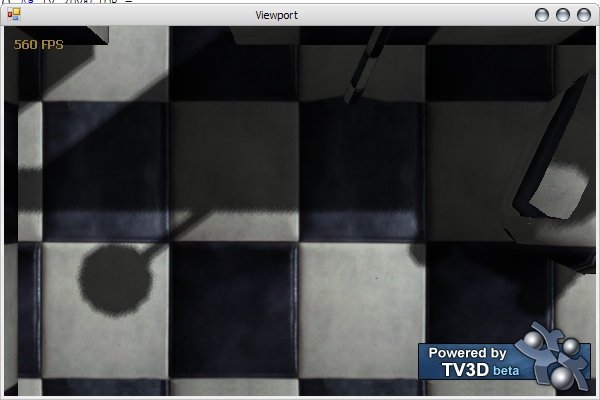

- 3×3 PCF with Bilinear Filtering

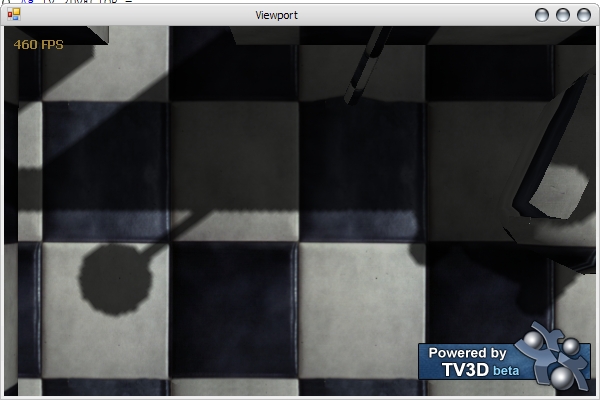

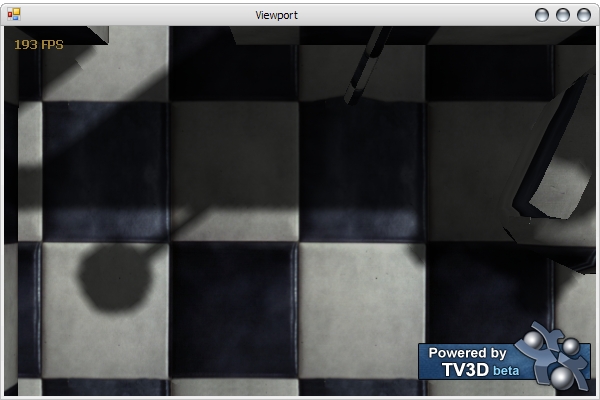

- 4×4 PCF

- 4×4 PCF with Jitter Sampling

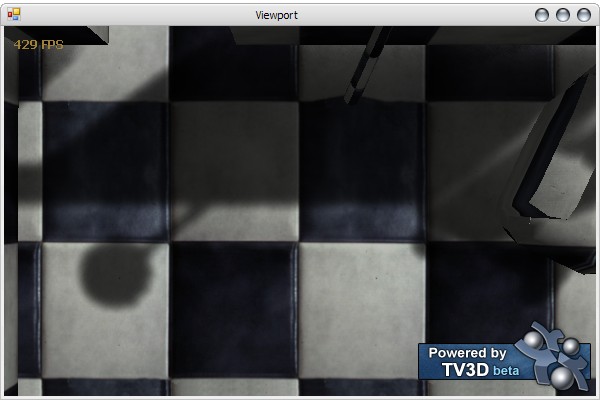

- 4×4 PCF with Bilinear Filtering

- 4×4 PCF with Bilinear Filtering (Downsampled 4x)

- 7×7 Gaussian Blurred 16-bit VSM (Variance Shadow Maps)

- 5×5 Gaussian Blurred 16-bit VSM (Downsampled 4x)

- 5×5 Gaussian Blurred 32-bit VSM (Downsampled 4x)

Notice the framerate, it’s a fairly good indicator of the performance of each technique for a very small frame buffer resolution. See the table below for a “real-world” performance evaluation.

About Downsampling, it looks like it has a pretty hard effect on the framerate, but the quality is comparable to a 4x resolution shadowmap. And, something that can’t be seen in the above screenshots, the framerate is much more constant when the viewport is larger. I’ll make a proper benchmark to show how those techniques compare in the real world.

A word on VSM, the last shot. It’s very fast, it’s smooth, but… it looks odd.

Notice the gradient, as if light was bleeding from underneath the wall. And the separation between the armchair shadow and the wall shadow! Those are some of the artifacts I was talking about. The main problem is precision; I’m using a 16-bit floating-point format because it gives free hardware filtering (up to anisotropic), but it also causes precision issues.

32-bit versus 16-bit might look identical, but there are other artifacts in the scene like on straight walls or on the floor that are present in the 16-bit version but not in the 32-bit version. The shadow also looks somewhat sharper.

Now about jitter sampling. After seeing that, one would say “Jitter sampling is useless! Bilinear filtering looks tons better.”

But jitter sampling has an advantage that bilinear does not have : it can be scaled. Here are some more shots.

- 4×4 PCF with Scaled Jitter Sampling, 4x resolution shadowmap

- 4×4 PCF with Bilinear Filtering, 4x resolution shadowmap

Here, bilinear filtering just looks like antialiasing. It looks kinda good, but the shadows are very hard. Scaled jitter sampling on the other hand, has very soft shadows! And they’re relatively faster, for the same shadowmap size.

Mesh Self-Shadowmapping Performance

I’ve decided to make a 1024×768 fullscreen benchmark of all good-looking techniques. Here are the results :

| Depth map size | Technique | Extensions | Framerate |

|---|---|---|---|

| 512×512 | PCF 4×4 | Bilinear Filtering | 111 fps |

| 1024×1024 | PCF 4×4 | Bilinear Filtering | 106 fps |

| 1024×1024 | PCF 4×4 | Downsampled 4x, Bilinear Filtering | 97 fps |

| 2048×2048 | PCF 4×4 | Jittered | 122 fps |

| 512×512 | VSM 5×5 | 16-bit, Hardware Filtered | 210 fps |

| 512×512 | VSM 5×5 | 32-bit, Bilinear Filtered | 117 fps |

| 1024×1024 | VSM 7×7 | 16-bit, Hardware Filtered | 75 fps |

| 1024×1024 | VSM 5×5 | Downsampled 4x, 16-bit, Hardware Filtered | 169 fps |

| 1024×1024 | VSM 5×5 | Downsampled 4x, 32-bit, Bilinear Filtered | 95 fps |

All in all, those are comparable techniques in terms of visual quality. What I take from these results :

- PCF is viewport size-dependant, not depth map size-dependant; so getting better visual quality by pumping up the depth map size is possible. On the other hand, downsampling to gain performance is futile, and doesn’t help the visual quality much either.

- Jittered sampling is very fast and works well with very high-resolution depth maps.

- VSM is very much depth map size-dependant, which means that it benifits alot from downsampling.

- 16-bit VSM on nVidia hardware is VERY fast, but when you go earlier than the 6000 series or on ATi hardware, you have to bilinear-filter it yourself… and it’s slow. Also, 16-bit has a lot of precision issues, I have to work on those…

- 32-bit VSM is slow, but usable when the depth map is kept pretty small (512×512). It also looks very good.

Landscape Shadowmapping Notes

Some remarks though about the choices I made for filtering in a multi-pass context :

- VSM is surprisingly slow in a multi-pass algorithm, so barely applicable to landscape shadowmapping. The precision artifacts just aren’t worth it.

- Unfiltered modes look surprisingly good because the shadowmap itself is hardware-filtered so it doesn’t need to be constructed smooth. That renders useless all the work I’ve put in making bilinear filtering possible at every level! I find 512×512 unfiltered to look great for “semi-hard shadows”.

- Jitter sampling looks great, has the advantage of being costless at high-resolution shadowmaps, and gets filtered — something that was impossible to do without multi-pass. It’s the clear winner here in the “soft shadows” category.

Bonus (jittered) teapot. :)

[quote]I considered using G16R16F, which is faster and has a smaller memory footprint, but my card can’t use it as a render target.[/quote]

Are you sure about that? The GPU programming guide is wrong, Geforce 6 & 7’s can render and filter D3DFMT_G16R16F. The caps viewer shows that they can and I’ve successfully used this format with VSM.