First part can be found here.

An implementation of the concepts presented in this series can be found here.

The Perfect Sigma

The last post’s closing note asked how to find the “perfect” standard deviation for a fixed number of taps, such that exactly 0% of light is lost. In other words, the sum of all taps must be exactly equal to 1.

It’s absolutely possible, but finding this exact σ with algebra and possibly calculus was a bit over my head. So I just “binary-searched” across the decimals until my Excel grid told me that, as far as double precision goes (15 significant digits), the sum is ≈ 1. I ended up with the following numbers :

- 17-tap : 1.2086

- 15-tap : 1.1402108

- 13-tap : 1.067359295

- 11-tap : 0.9890249035

- 9-tap : 0.90372907227

- 7-tap : 0.809171316279

- 5-tap : 0.7013915463849
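The “binary search” described above can be sketched outside of Excel as well. This is only a minimal Python sketch under a few assumptions: the taps are the Gaussian function sampled at integer offsets and left unnormalized, the search bracket of 0.3 to 3.0 is my own pick, and the function names (`gaussian_taps`, `lost_light`, `perfect_sigma`) are hypothetical, not taken from the spreadsheet.

```python
import math

def gaussian_taps(sigma, taps):
    """Gaussian sampled at integer offsets -r..r (assumed weight definition)."""
    radius = taps // 2
    norm = 1.0 / (math.sqrt(2.0 * math.pi) * sigma)
    return [norm * math.exp(-(x * x) / (2.0 * sigma * sigma))
            for x in range(-radius, radius + 1)]

def lost_light(sigma, taps):
    """1 minus the sum of the taps; negative when the taps add up to more than 1."""
    return 1.0 - math.fsum(gaussian_taps(sigma, taps))

def perfect_sigma(taps, lo=0.3, hi=3.0, iterations=100):
    """Bisect for the sigma at which the taps sum to 1.

    Assumes the sum is above 1 at `lo` and below 1 at `hi`,
    which holds for the 5- to 17-tap kernels discussed here.
    """
    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        if lost_light(mid, taps) < 0.0:
            lo = mid  # taps sum to more than 1: sigma is too small
        else:
            hi = mid  # light is lost: sigma is too large
    return 0.5 * (lo + hi)

if __name__ == "__main__":
    for taps in (5, 7, 9):
        print(taps, perfect_sigma(taps))
```

Under these assumptions the 5-tap result lands near 0.7014, in line with the list above. For the larger tap counts the residual sits very close to double-precision noise, so only the leading decimals should be trusted.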

17-taps per pass, so basically 289 effective texture samples (actually 34…), and a standard deviation of 1.2?! This is what it looks like, original on right, blurred on left :

So my first thought was, “This can’t be right.”

Good Enough

In my first demo, I used a σ = 2.7 for 9 taps, and it didn’t look that bad.

So this got me thinking, how “boxy” can the Gaussian become for it to still look believable? Or, differently put, how much light can we lose before getting a Box-like filter?

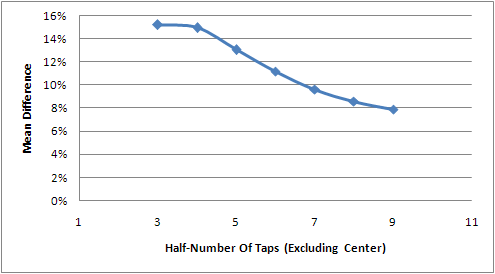

To determine that, I needed a metric, a scale. I decided to use the Mean Difference, a.k.a. Average Deviation.

A Box filter does not vary: it’s constant, so its mean difference is always 0. By calculating how low my Gaussian approximations’ mean difference goes, one can find how similar they really are to a Box filter.

The upper limit (0% box-filter-similarity) would be the mean difference of a filter that loses no light at all, and the lower limit (100% similarity) would be equal to that of a box filter, again to 15 significant digits.
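To make the two limits concrete, here is a rough Python sketch of such a similarity score. It rests on assumptions of mine: “Average Deviation” is taken to mean the mean absolute deviation from the mean (what Excel’s AVEDEV computes), the taps are the Gaussian sampled at integer offsets without normalization, and the upper limit is computed directly from the 0%-lost-light σ rather than from any fitted curve. The names `average_deviation` and `box_similarity` are hypothetical.

```python
import math

def gaussian_taps(sigma, taps):
    """Gaussian sampled at integer offsets -r..r (assumed weight definition)."""
    radius = taps // 2
    norm = 1.0 / (math.sqrt(2.0 * math.pi) * sigma)
    return [norm * math.exp(-(x * x) / (2.0 * sigma * sigma))
            for x in range(-radius, radius + 1)]

def average_deviation(values):
    """Mean absolute deviation from the mean; 0 for a constant (box) kernel."""
    mean = math.fsum(values) / len(values)
    return math.fsum(abs(v - mean) for v in values) / len(values)

def box_similarity(sigma, taps, perfect_sigma):
    """0.0 at the sigma that loses no light, approaching 1.0 for a box filter."""
    upper = average_deviation(gaussian_taps(perfect_sigma, taps))
    current = average_deviation(gaussian_taps(sigma, taps))
    return 1.0 - current / upper

if __name__ == "__main__":
    # 0.90372907227 is the 9-tap "perfect" sigma listed earlier in the post.
    print(box_similarity(1.8, 9, 0.90372907227))
```

With this reading of the metric, a 9-tap kernel at σ = 1.8 comes out close to 50% similarity, but treat that as a sanity check of the sketch rather than a reproduction of the spreadsheet.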

So I fired up Excel and made those calculations. It turns out that at 0% lost light, the mean difference is not linear with the number of taps. In fact, the shape highly resembles a bell curve :

I fitted this curve to a 4th order polynomial (which seemed to fit best in the graph) and at most, I got a 0.74% similarity for 0% lost light, in a 9-tap filter. I’m not looking for something perfect since this metric will be used for visual comparison; the whole process is highly subjective.

Eye Exam

Time to test an implementation and determine how much similarity is too much to this blogger’s eye.

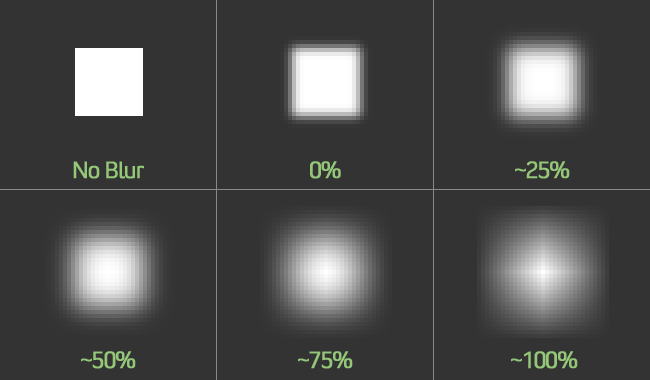

Here’s a fairly tiny 17×17 white square on a dark grey background, blurred with a 17-tap filter of varying σ and blown up 4 times with nearest-neighbour interpolation :

The percentage is my “box-likeness” or “box filter similarity” calculation described in the last section.

Up to 50%, I’m quite pleased with the results. The blurred halo feels very round and neat, with no box limit that cuts off the values harshly. But at 75%, it starts to look seriously boxy. In fact, anything bigger than 60% visibly cuts the blur off. And at 100%, it definitely doesn’t look like a Gaussian, more like a star with a boxy, diamond-shaped blur.

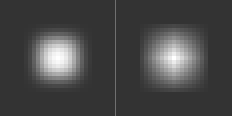

Here’s how 60% and 100% respectively look with a 9-tap filter, for comparison :

50% looks a liiiiiitle bit rounder, but 60% is still very acceptable and provides a nice boost in blurring capability.

Conclusion

My conclusion, like my analysis, is extremely subjective…

I recommend not using more than 60% box-similarity (as calculated with my approach) to keep a good-looking Gaussian blur, and not more than 50% if your scene has very sharp contrasts/angles and you want optimal image quality.

The ideal case of 0% lost light is numerically perfect, but really impractical in the real world. It feels like a waste of GPU cycles, and I don’t see any reason to limit ourselves to such low σ values.

Here’s a list of 50% and 60% standard deviations for all the tap counts that I consider practical for real-time shaders :

- 17-tap : 3.66 – 4.95

- 15-tap : 2.85 – 3.34

- 13-tap : 2.49 – 2.95

- 11-tap : 2.18 – 2.54

- 9-tap : 1.8 – 2.12

- 7-tap : 1.55 – 1.78

- 5-tap : 1.35 – 1.54

And last but not least, the Excel document (made with 2007 but saved as 2003 format) that I used to make this article : Gaussian Blur.xls

I hope you’ll find this useful! Me, I think I’m done with the Gaussian. :D

If you are concerned about not losing any brightness as a result of applying a Gaussian blur, then you can just normalize your Gaussian kernel weights. To do this, sum up all the weights, then divide each weight by that sum.

Typically this will be a very small adjustment to the weights, so you will not lose the Gaussian blur look, and you can pick from a wide range of sigma values.

@Kris, I’m well aware of this. That was my first attempt, you can see that in my two earlier posts about the Gaussian.

My goal here was to find how many samples were necessary to get a believable Gaussian blur for each sigma value, or the inverse (what’s the appropriate sigma value for a said number of samples).

Reading your XLS file, I see a very low weight for the last sample : 0.0000000514 for a 13-tap.

I doubt multiplying this weight by a pixel color can modify the final result.

In a 2-pass shader you have (at most) 0.0000000514 * 1.0 (extreme left point) + 0.0000000514 * 1.0 (extreme right point), and you store the result in an 8-bit texture where the smallest value is 1 / 255.0 = 0.00392 -> you add nothing at all.

Hey there, old article but I’ll still chime in :)

There is a very low contribution for a sigma (standard deviation) of ~1.06 for 13-tap. You can tweak the sigma value to get a bigger blur factor and more contribution in the farthest sample. I tried to optimize for “box-filter likeness” in this sheet and this article, which is a bit arbitrary and should be taken with a grain of salt.